NoSQL Database Connectors

NoSQL Database Connectors provide powerful integration capabilities with various NoSQL databases, enabling flexible data storage and retrieval for diverse data models. These connectors support document stores, key-value stores, wide-column stores, and graph databases, making them versatile tools for handling unstructured and semi-structured data in your RAG applications.

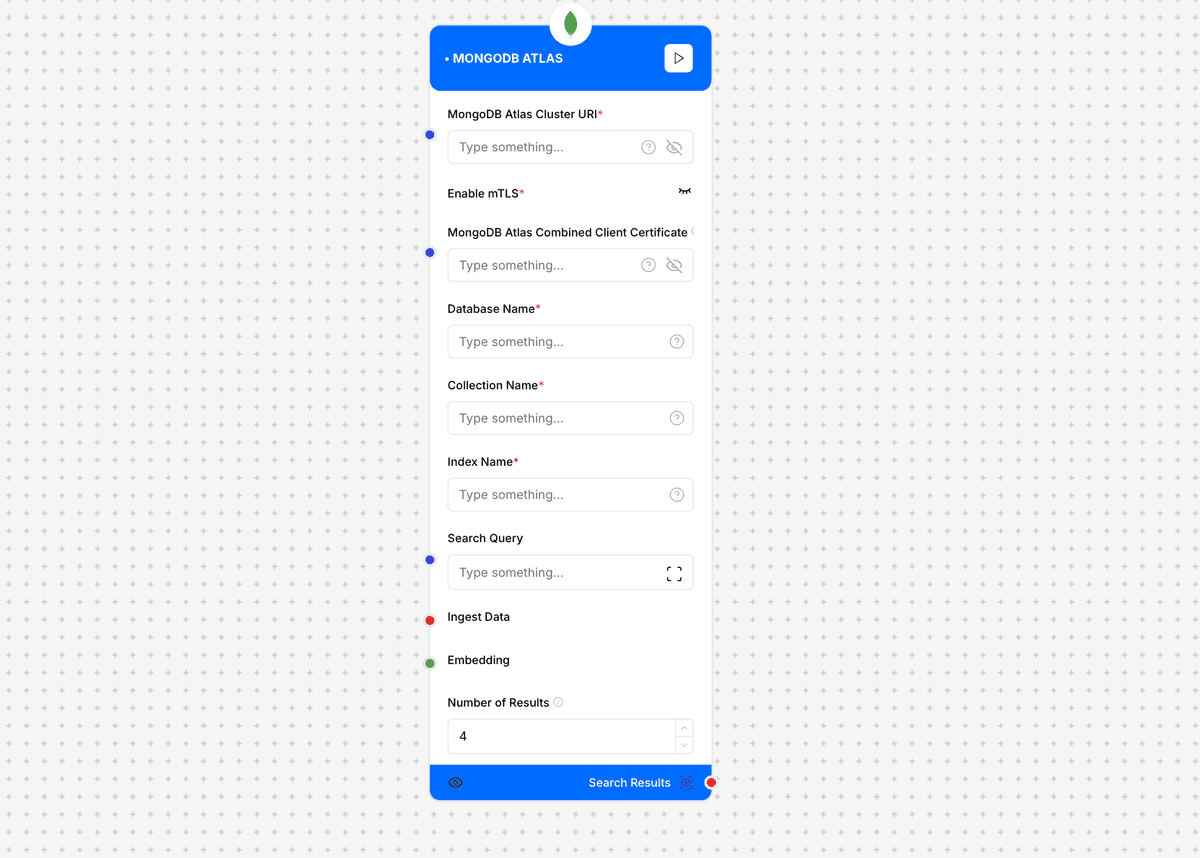

1.1 MongoDB Atlas

MongoDB Atlas Connector Interface

Description

The MongoDB Atlas connector enables seamless integration with MongoDB's cloud database service. This connector provides robust capabilities for working with document-based data models, supporting complex queries, aggregations, and full-text search operations while ensuring enterprise-grade security and scalability.

Use Cases

- Storing and querying unstructured document data

- Building flexible content management systems

- Implementing real-time analytics pipelines

- Managing user profiles and preferences

- Handling IoT data streams and time-series data

Inputs

- MongoDB Atlas Cluster URI: Connection string (required)

Example: mongodb+srv://username:password@cluster.mongodb.net

- Enable mTLS: TLS/SSL configuration (optional)

Example: true

- Combined Client Certificate: Authentication certificate (optional)

Example: /path/to/certificate.pem

- Database Name: Target database (required)

Example: myapp_production

- Collection Name: Target collection (required)

Example: users

- Index Name: Search index identifier (optional)

Example: default

Outputs

The connector returns query results and operation metadata in JSON format.

Example Output:

{

"status": "success",

"operation_info": {

"timestamp": "2024-03-15T14:30:22Z",

"operation_type": "find",

"documents_processed": 100

},

"results": [

{

"_id": "65f4a2b8e234d12345678901",

"username": "john_doe",

"email": "john.doe@example.com",

"profile": {

"firstName": "John",

"lastName": "Doe",

"age": 30,

"interests": ["technology", "sports"]

},

"created_at": "2024-02-20T08:15:30Z"

}

],

"metadata": {

"index_used": "email_1",

"execution_time_ms": 45,

"documents_returned": 1,

"documents_scanned": 1

}

}Implementation Notes

- Use appropriate index strategies for query optimization

- Implement proper error handling for network issues

- Consider using connection pooling for better performance

- Monitor database operations and query performance

- Implement proper retry mechanisms for resilience

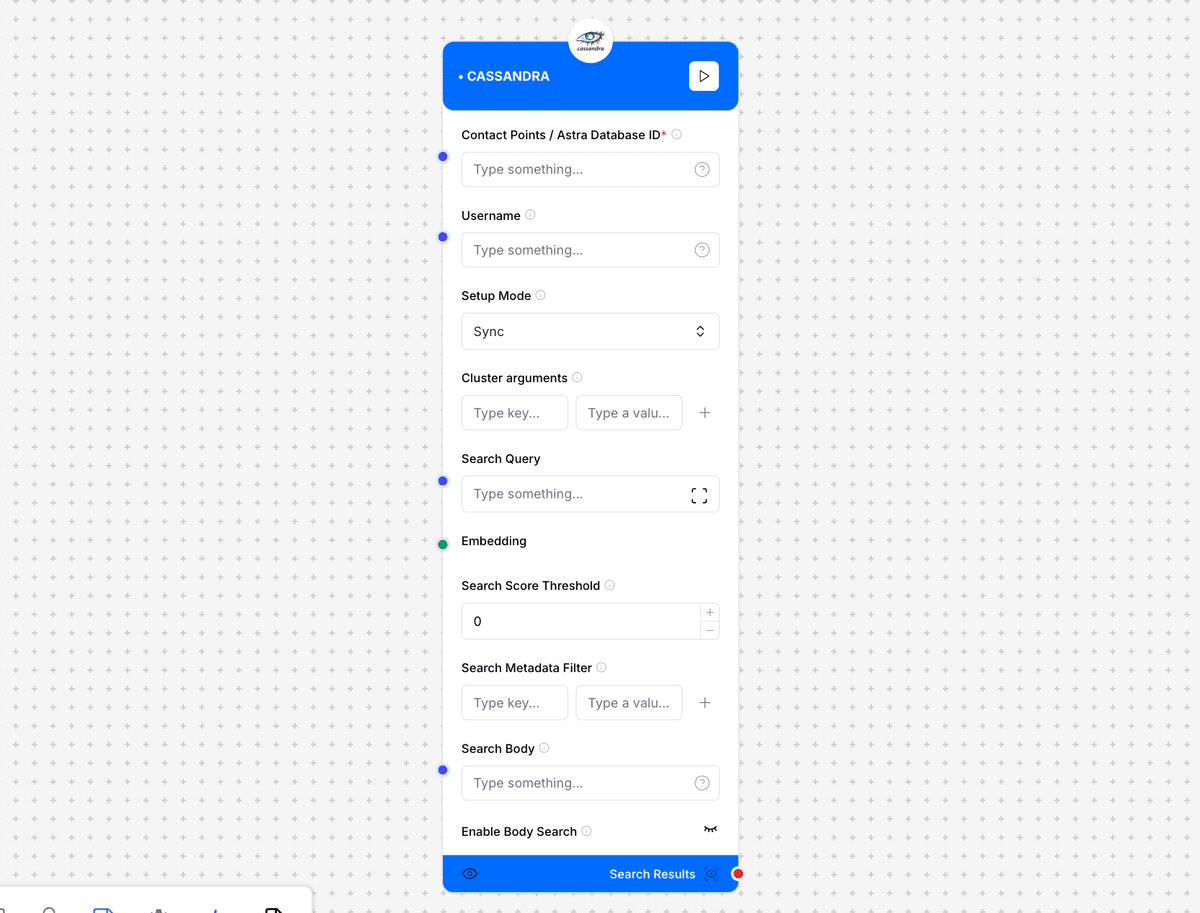

1.2 Cassandra

Cassandra Connector Interface

Description

The Cassandra connector provides integration with Apache Cassandra and DataStax Astra DB, enabling high-performance, distributed data storage and retrieval. This connector is optimized for handling large-scale, distributed datasets with high availability and fault tolerance requirements.

Use Cases

- Managing time-series data at scale

- Handling high-throughput write operations

- Building distributed event logging systems

- Implementing real-time analytics

- Managing product catalogs and inventories

Inputs

- Contact Points / Astra Database ID: Connection endpoints (required)

Example: cluster1.company.com,cluster2.company.com

- Username: Authentication username (required)

Example: cassandra_user

- Password / AstraDB Token: Authentication credentials (required)

Example: AstraCS:KHhfdjksHFKJHf:73

- Keyspace: Database keyspace (required)

Example: analytics_keyspace

- Table Name: Target table (required)

Example: user_events

- TTL Seconds: Time-to-live for data (optional)

Example: 86400

- Batch Size: Processing batch size (optional)

Example: 100

Outputs

The connector returns query results and operation metadata in JSON format.

Example Output:

{

"status": "success",

"operation_info": {

"timestamp": "2024-03-15T15:45:30Z",

"consistency_level": "LOCAL_QUORUM",

"operation_type": "select"

},

"results": [

{

"event_id": "uuid-1234-5678",

"user_id": "user123",

"event_type": "page_view",

"timestamp": "2024-03-15T15:44:22Z",

"properties": {

"page": "/products",

"referrer": "google.com",

"device": "mobile"

}

}

],

"metadata": {

"coordinator_node": "node1.dc1",

"execution_time_ms": 25,

"rows_fetched": 1,

"partition_key_accessed": "user123"

}

}Implementation Notes

- Design partition keys for optimal data distribution

- Choose appropriate consistency levels for operations

- Implement proper retry policies for network issues

- Monitor cluster health and performance metrics

- Use prepared statements for better performance

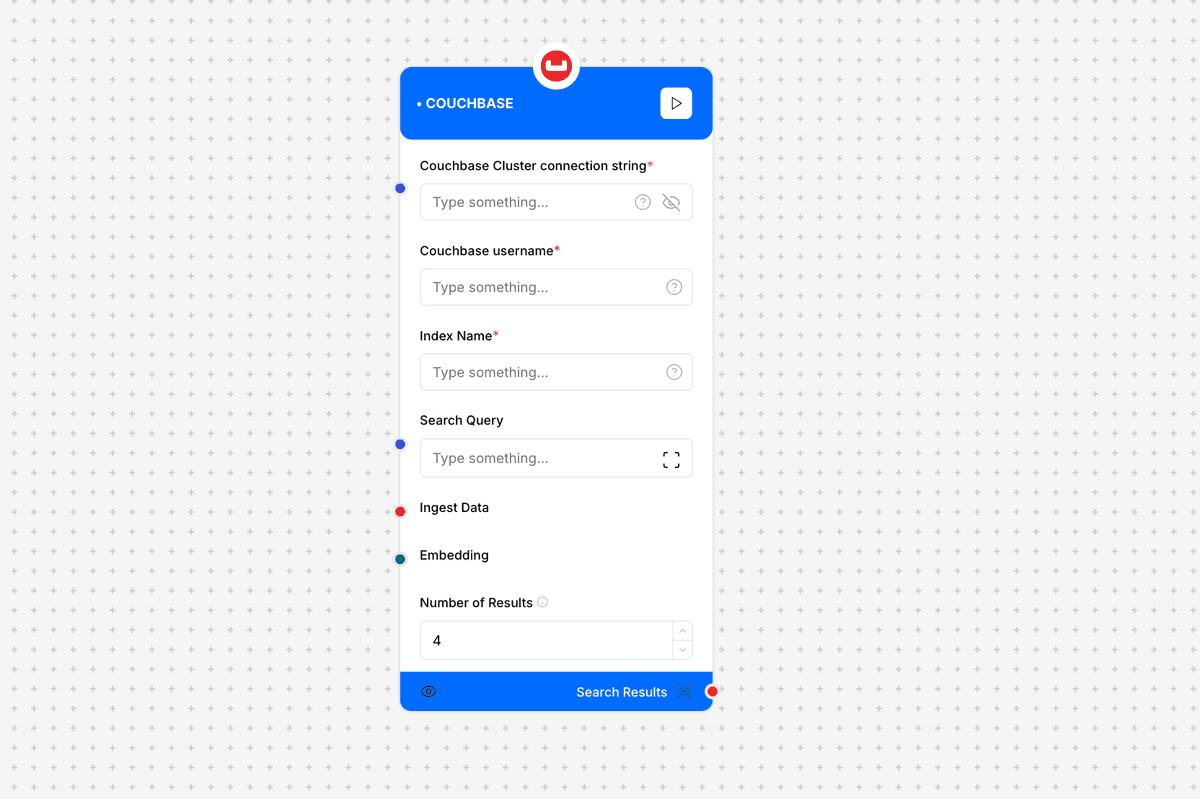

1.3 Couchbase

Couchbase Connector Interface

Description

The Couchbase connector enables integration with Couchbase Server, providing high-performance document database capabilities with built-in caching and SQL-like query language (N1QL). This connector combines the flexibility of JSON documents with the power of memory-first architecture for fast data operations.

Use Cases

- Building real-time web applications

- Managing user sessions and profiles

- Implementing caching layers for performance

- Handling document-based data models

- Creating scalable gaming applications

Inputs

- Cluster Connection String: Connection URL (required)

Example: couchbase://localhost

- Username: Authentication username (required)

Example: admin

- Password: Authentication password (required)

Example: ••••••••

- Bucket Name: Target bucket (required)

Example: app_data

- Scope Name: Target scope (optional)

Example: inventory

- Collection Name: Target collection (optional)

Example: products

- N1QL Query: SQL-like query for data retrieval (optional)

Example: SELECT * FROM app_data WHERE type = 'product'

Outputs

The connector returns query results and operation metadata in JSON format.

Example Output:

{

"status": "success",

"operation_info": {

"timestamp": "2024-03-15T16:20:15Z",

"operation_type": "n1ql_query",

"bucket": "app_data"

},

"results": [

{

"id": "product_123",

"type": "product",

"name": "Gaming Laptop",

"price": 1299.99,

"specifications": {

"cpu": "Intel i7",

"ram": "16GB",

"storage": "512GB SSD"

},

"last_updated": "2024-03-14T08:30:00Z"

}

],

"metadata": {

"execution_time_ms": 35,

"result_count": 1,

"scan_consistency": "request_plus"

}

}Implementation Notes

- Use appropriate scan consistency levels for queries

- Implement connection pooling for better performance

- Consider using GSI indexes for query optimization

- Monitor bucket and cluster metrics

- Handle failover scenarios appropriately

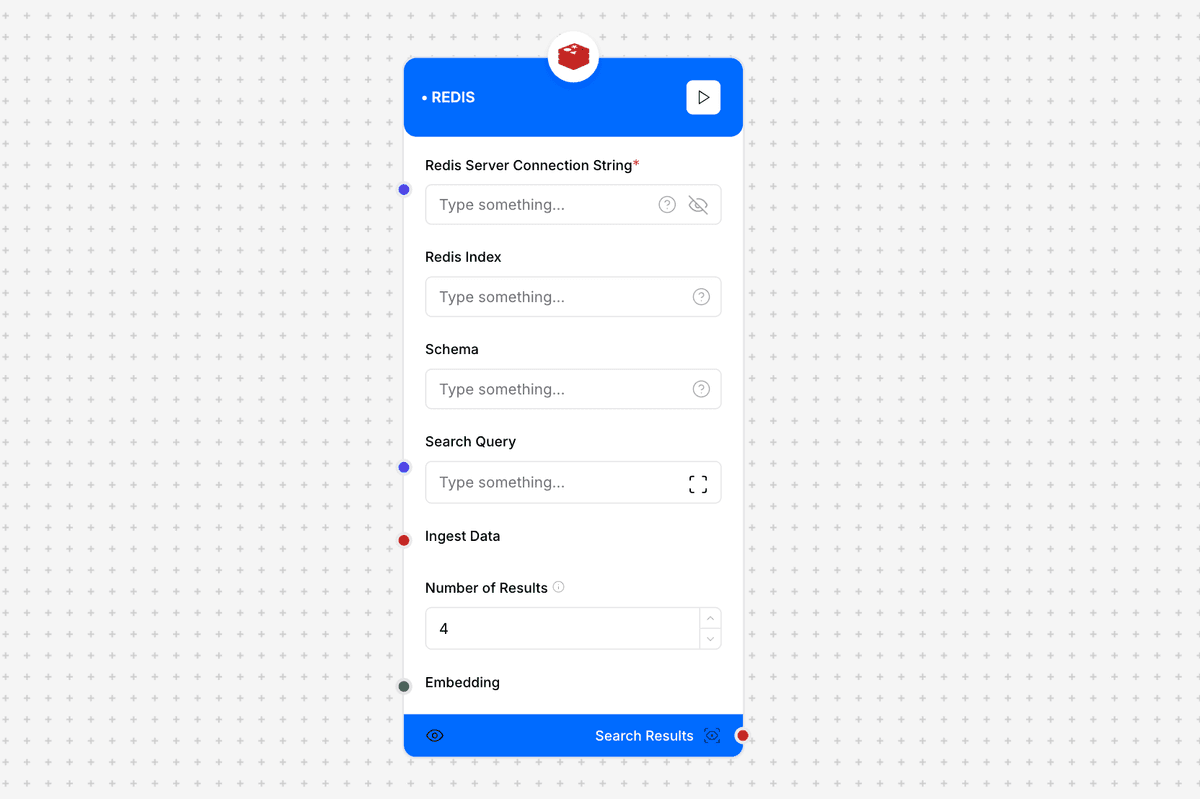

1.4 Redis

Redis Connector Interface

Description

The Redis connector enables integration with Redis, an in-memory data structure store that can be used as a database, cache, message broker, and queue. This connector provides high-performance operations with support for various data structures and Redis modules like RediSearch and RedisJSON.

Use Cases

- Implementing high-performance caching

- Building real-time leaderboards

- Managing session data

- Creating pub/sub messaging systems

- Handling rate limiting and queuing

Inputs

- Redis Connection String: Connection URL (required)

Example: redis://localhost:6379

- Password: Authentication password (optional)

Example: ••••••••

- Database Number: Redis database number (optional)

Example: 0

- Key Pattern: Pattern for key operations (optional)

Example: user:*

Outputs

The connector returns operation results in JSON format.

Example Output:

{

"status": "success",

"operation_info": {

"timestamp": "2024-03-15T17:10:45Z",

"operation_type": "get",

"key": "user:123"

},

"result": {

"id": "123",

"username": "john_doe",

"last_login": "2024-03-15T16:45:30Z",

"session_data": {

"token": "xyz789",

"expires_in": 3600

}

},

"metadata": {

"key_type": "hash",

"ttl": 3600,

"memory_usage": "1.2KB"

}

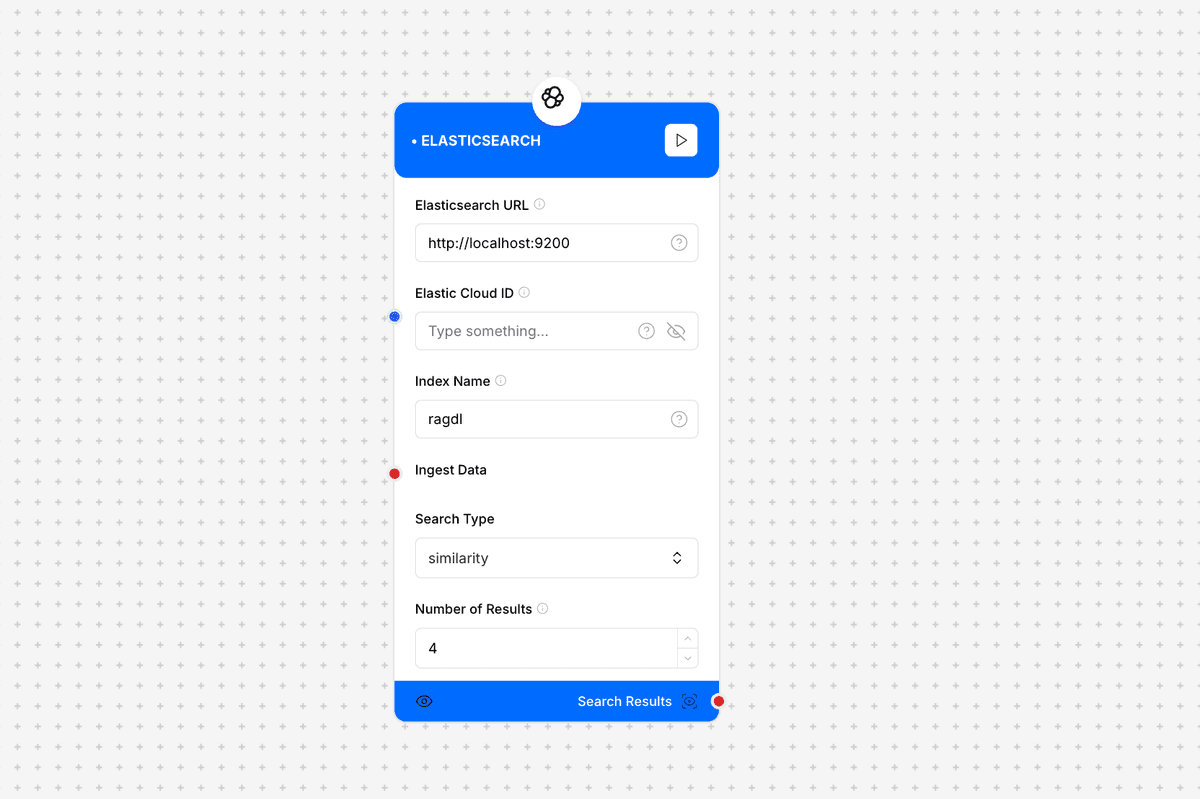

}1.5 Elasticsearch

Elasticsearch Connector Interface

Description

The Elasticsearch connector provides integration with Elasticsearch, a distributed search and analytics engine. This connector enables powerful full-text search capabilities, along with complex aggregations and analytics on structured and unstructured data.

Use Cases

- Implementing full-text search functionality

- Building log analysis systems

- Creating advanced analytics dashboards

- Managing document search and retrieval

- Handling time-series data analysis

Inputs

- Elasticsearch URL: Server endpoint (required)

Example: https://elasticsearch:9200

- Index Name: Target index (required)

Example: products

- Username: Authentication username (optional)

Example: elastic

- Password: Authentication password (optional)

Example: ••••••••

- API Key: API authentication (optional)

Example: es_api_key_123

Outputs

The connector returns search results and metadata in JSON format.

Example Output:

{

"status": "success",

"operation_info": {

"timestamp": "2024-03-15T17:45:30Z",

"operation_type": "search",

"index": "products"

},

"hits": {

"total": {

"value": 1,

"relation": "eq"

},

"hits": [

{

"_index": "products",

"_id": "123",

"_score": 1.0,

"_source": {

"name": "Wireless Headphones",

"description": "High-quality wireless headphones with noise cancellation",

"price": 199.99,

"category": "Electronics",

"tags": ["wireless", "audio", "bluetooth"]

}

}

]

},

"metadata": {

"took": 5,

"timed_out": false,

"shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

}

}

}Implementation Notes

- Design proper mapping for optimal search

- Use appropriate analyzers for text fields

- Implement index lifecycle management

- Monitor cluster health and performance

- Consider using bulk operations for efficiency

Important Implementation Considerations:

- Choose the appropriate NoSQL database based on your data model and access patterns

- Implement proper security measures including encryption and access control

- Monitor performance metrics and implement appropriate scaling strategies

- Use connection pooling and proper error handling for reliability

- Follow each database's best practices for optimal performance

- Regularly backup data and implement disaster recovery procedures