LM Studio Embeddings

Generate embeddings using LM Studio's local model server. This component suits local workflows: LM Studio provides a graphical interface, supports multiple open-source embedding models, and processes input text on your own machine rather than sending it to an external API.

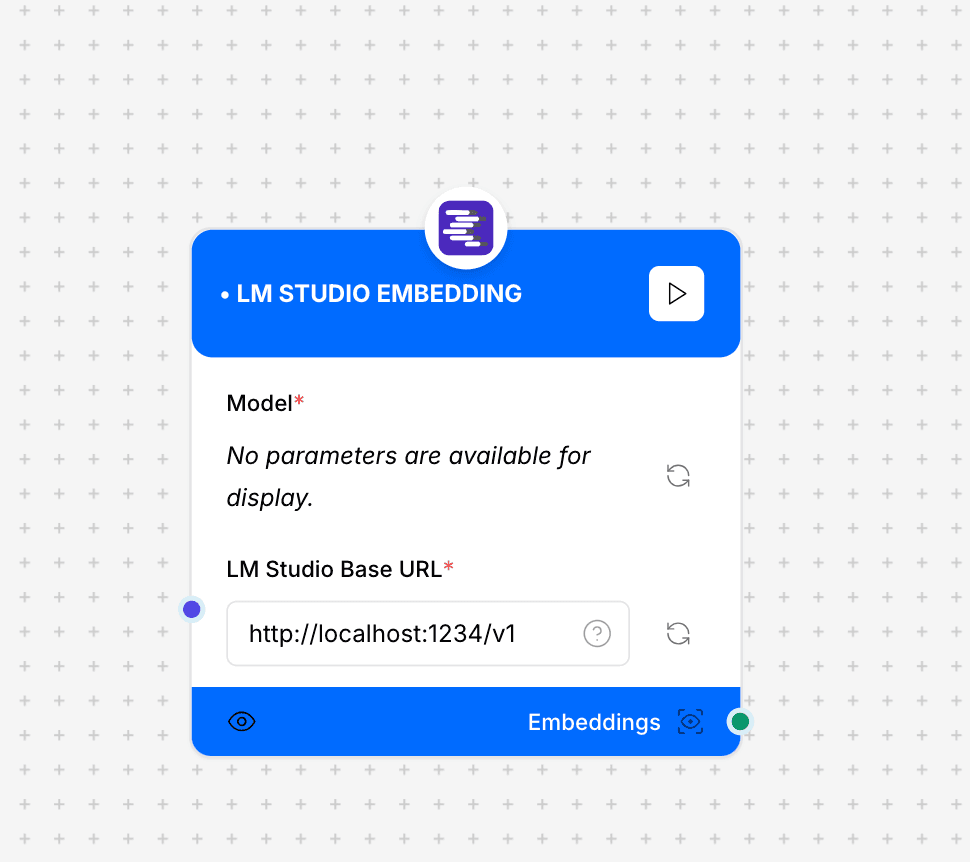

LM Studio Embeddings component interface and configuration

LM Studio Requirement: This component requires the LM Studio application to be installed and running with a local server enabled. Make sure you have loaded a compatible embedding model in LM Studio before using this component.
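One way to confirm this requirement before using the component is to query the server for its model list. The sketch below assumes LM Studio's OpenAI-compatible `GET /models` route under the base URL; the function name `checkLmStudioServer` and the injectable `fetchImpl` parameter are illustrative and not part of this component.

```javascript
// Sketch: check that an LM Studio server is reachable and list its models.
// Assumption: the server exposes an OpenAI-style GET {baseUrl}/models route.
// `fetchImpl` is injectable so the check can be exercised without a server.
async function checkLmStudioServer(baseUrl, fetchImpl = fetch) {
  // Drop trailing slashes so "…/v1" and "…/v1/" produce the same URL.
  const url = `${baseUrl.replace(/\/+$/, "")}/models`;
  try {
    const response = await fetchImpl(url);
    if (!response.ok) {
      return { running: false, models: [], error: `HTTP ${response.status}` };
    }
    const payload = await response.json();
    const models = (payload.data ?? []).map((m) => m.id);
    return { running: true, models, error: null };
  } catch (err) {
    // Usually a connection failure: server not started, or a different port.
    return { running: false, models: [], error: String(err.message ?? err) };
  }
}
```

A call such as `checkLmStudioServer("http://localhost:1234/v1")` resolves to an object whose `running` flag and `models` list can be inspected before any embedding request is sent.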

Component Inputs

- LM Studio Base URL: The URL of your local LM Studio server

Example: "http://localhost:1234/v1"

- LM Studio API Key: API key (if configured in LM Studio)

Example: "lmstudio-xyz123" or empty string if not using authentication

- Temperature: Controls randomness in embedding generation

Example: 0.0 (Default, recommended for consistent embeddings)

- Input Text: Text content to convert to embeddings

Example: "This is a sample text for embedding generation."
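To illustrate how these inputs might map onto an HTTP request, here is a minimal sketch that builds a call to an OpenAI-style `/embeddings` route. It is not the component's actual implementation. The helper name `buildEmbeddingRequest`, the `model` field, and the `Bearer` authorization scheme are assumptions. Temperature is left out because the OpenAI-style embeddings request body has no such field; how the component applies it is not shown in this document.

```javascript
// Sketch: turn the component inputs into an OpenAI-style embeddings request.
// Assumptions: POST {baseUrl}/embeddings, Bearer auth, and a `model` field.
function buildEmbeddingRequest({ baseUrl, apiKey = "", model, input }) {
  const headers = { "Content-Type": "application/json" };
  // Only send an Authorization header when a key is actually configured.
  if (apiKey) {
    headers["Authorization"] = `Bearer ${apiKey}`;
  }
  return {
    url: `${baseUrl.replace(/\/+$/, "")}/embeddings`,
    options: {
      method: "POST",
      headers,
      body: JSON.stringify({ model, input }),
    },
  };
}

const request = buildEmbeddingRequest({
  baseUrl: "http://localhost:1234/v1",
  apiKey: "", // empty string: no authentication
  model: "all-MiniLM-L6-v2", // hypothetical: whichever model is loaded
  input: "This is a sample text for embedding generation.",
});
console.log(request.url); // http://localhost:1234/v1/embeddings
```

The returned `url` and `options` can be passed directly to `fetch(request.url, request.options)`.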

Component Outputs

- Embeddings: Vector representation of the input text

Example: [0.024, -0.015, 0.056, ...]

- Dimensions: Size of the embedding vector

Example: Varies based on the model loaded in LM Studio (commonly 384, 768, 1024, or 4096; all-MiniLM-L6-v2, for instance, produces 384-dimensional vectors)

- Metadata: Additional information about the embedding process

Example: model_info: all-MiniLM-L6-v2, temperature: 0.0, processing_time: 135
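The outputs above can be derived from an OpenAI-style embeddings response, which carries a `data` array of `{ index, embedding }` items and a `model` name. The sketch below shows one possible mapping. The function name `toComponentOutputs` and the `vector_count` field are illustrative assumptions, and `processing_time` is omitted because the response does not supply it.

```javascript
// Sketch: map an OpenAI-style embeddings response to the component outputs.
function toComponentOutputs(response) {
  // Sort by index so vectors line up with the order of the input texts.
  const ordered = [...response.data].sort((a, b) => a.index - b.index);
  const embeddings = ordered.map((item) => item.embedding);
  // All vectors from one model share a length; read it from the first one.
  const dimensions = embeddings.length > 0 ? embeddings[0].length : 0;
  return {
    embeddings,
    dimensions,
    metadata: { model_info: response.model, vector_count: embeddings.length },
  };
}

// Shortened sample response (real vectors have hundreds of components).
const sampleResponse = {
  object: "list",
  model: "all-MiniLM-L6-v2",
  data: [{ object: "embedding", index: 0, embedding: [0.024, -0.015, 0.056] }],
};
const outputs = toComponentOutputs(sampleResponse);
console.log(outputs.dimensions); // 3
```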

Model Selection

Compatible Models

LM Studio supports various embedding models that can be downloaded and used locally:

- Sentence Transformers (all-MiniLM-L6-v2, all-mpnet-base-v2)

- E5 Models (e5-small, e5-base)

- BGE Models (bge-small-en, bge-base-en)

- Any other embedding model compatible with LM Studio

Model Considerations

When selecting a model in LM Studio, consider these factors:

- Model Size: Smaller models load faster but may produce lower quality embeddings

- RAM Requirements: Larger models need more system memory

- Vector Dimensions: Different models produce different embedding sizes

- Language Support: Some models are English-only, others are multilingual

Implementation Example

    // Basic configuration
    const embedder = new LMStudioEmbeddor({
      lmStudioBaseUrl: "http://localhost:1234/v1",
      lmStudioApiKey: "your-api-key" // use "" if authentication is not configured
    });

    // Configuration with an explicit temperature
    // (the default, 0.0, is recommended for consistent embeddings)
    const customEmbedder = new LMStudioEmbeddor({
      lmStudioBaseUrl: "http://localhost:1234/v1",
      lmStudioApiKey: "your-api-key",
      temperature: 0.7
    });

    // Generate embeddings for a single text
    // (await must run inside an async function or an ES module)
    const result = await embedder.embed({
      input: "Your text to embed"
    });
    console.log(result.embeddings);

    // Batch processing
    const batchResult = await embedder.embedBatch({
      inputs: [
        "First text to embed",
        "Second text to embed"
      ]
    });

Use Cases

- Privacy-Focused Applications: Generate embeddings without sending data to external APIs

- Local Development: Test and prototype embedding-based applications without API costs

- Offline Environments: Create embeddings in environments without internet access

- Model Comparison: Test different embedding models using LM Studio's user-friendly interface

- Educational Projects: Learn about embeddings without subscription requirements

Best Practices

- Verify the LM Studio server is running before attempting to generate embeddings

- Pre-load models in LM Studio to avoid delays when making the first request

- Monitor system resource usage, especially when using larger models

- Use appropriate batch sizes to balance throughput and memory usage

- Implement error handling for connection issues with the local server
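The batching and error-handling practices above can be combined in a small helper. This is a sketch under stated assumptions, not part of the component: `embedInBatches`, its option names, and the `embedBatch` callback (which takes an array of texts and resolves to an array of vectors) are all hypothetical, and the default batch size of 16 is an arbitrary starting point to tune against available memory.

```javascript
// Split a list into consecutive chunks of at most `size` items.
function chunk(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("batch size must be a positive integer");
  }
  const chunks = [];
  for (let i = 0; i < items.length; i += size) {
    chunks.push(items.slice(i, i + size));
  }
  return chunks;
}

// Embed texts batch by batch, retrying each failed batch a limited number
// of times. `embedBatch` is a caller-supplied (hypothetical) async function.
async function embedInBatches(texts, embedBatch, options = {}) {
  const { batchSize = 16, retries = 2, delayMs = 500 } = options;
  const vectors = [];
  for (const batch of chunk(texts, batchSize)) {
    let attempt = 0;
    while (true) {
      try {
        vectors.push(...(await embedBatch(batch)));
        break; // this batch succeeded, move on to the next one
      } catch (err) {
        if (attempt >= retries) {
          throw new Error(
            `Embedding failed after ${attempt + 1} attempts: ${err.message}`
          );
        }
        attempt += 1;
        // Linear backoff gives a restarting local server time to recover.
        await new Promise((resolve) => setTimeout(resolve, delayMs * attempt));
      }
    }
  }
  return vectors;
}
```

Smaller batches lower peak memory use at the cost of more requests. Retrying per batch means a brief server interruption does not discard vectors that were already computed.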