1. Amazon Connectors

These Amazon connectors enable your workflow to seamlessly integrate with AWS cloud services for retrieving, processing, and utilizing data in your RAG applications.

1.1 DynamoDB

DynamoDB Connector Interface

Description

The DynamoDB connector provides direct access to Amazon DynamoDB tables for read and write operations. It enables you to query, scan, and manipulate NoSQL data stored in DynamoDB's highly scalable document storage service.

Use Cases

- Retrieving user profiles and preferences for personalized experiences

- Storing and accessing session data for stateful applications

- Managing high-throughput transactional data

- Building applications requiring single-digit millisecond response times

- Handling schema-flexible data with varying attributes

Inputs

- AWS Access Key ID: Your AWS access key for authentication (required)

Example: AKIAIOSFODNN7EXAMPLE

- AWS Secret Access Key: Your AWS secret key for authentication (required)

Example: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- AWS Region Name: The AWS region where your DynamoDB is hosted (required)

Example: us-east-1

- DynamoDB Table Name: Name of the DynamoDB table to access (required)

Example: SessionTable

- Session ID: Unique identifier for tracking sessions (optional)

Example: user-123456

- User Message: Message content to store (optional)

Example: {'query': 'How do I create a new account?'}

Outputs

JSON formatted data from the DynamoDB table that can be processed by subsequent nodes in your workflow.

Example Output:

{

"Items": [

{

"SessionId": "user-123456",

"Timestamp": "2023-05-10T14:30:22Z",

"UserQuery": "How do I create a new account?",

"ResponseId": "resp-789012",

"Metadata": {

"source": "chat",

"intent": "account_creation"

}

}

],

"Count": 1,

"ScannedCount": 1

}Implementation Notes

- For optimal performance, use partition keys in queries whenever possible

- Consider provisioned capacity for predictable workloads and on-demand for variable traffic

- Be mindful of DynamoDB's item size limit (400KB per item)

- Use sparse indexes and projections to minimize costs

1.2 AWS OpenSearch

AWS OpenSearch Interface

Description

The AWS OpenSearch connector enables querying and manipulation of data stored in Amazon OpenSearch Service (formerly Amazon Elasticsearch). This connector leverages OpenSearch's powerful search and analytics capabilities to perform text searches, semantic queries, and vector similarity operations on large datasets.

Useful Links

Use Cases

- Full-text search across large document collections

- Vector similarity search for semantic matching

- Log analysis and real-time monitoring

- Operational analytics on semi-structured data

- Natural language processing applications

Inputs

- OpenSearch URL: Endpoint URL of your OpenSearch domain (required)

Example: https://search-mydomain-abcdefg123456.us-east-1.es.amazonaws.com

- Index Name: Name of the index to query or write to (required)

Example: document-index

- Search Type: Type of search to perform (required)

Options: similarity, keyword, hybrid

- Search Input: Query string for text search (required)

Example: "cloud computing security practices"

- Ingest Data: JSON data to index (optional)

Example: {'title': 'Cloud Security', 'content': 'Best practices for securing cloud environments...'}

- Embedding: Vector data for similarity search (optional)

Example: [0.12, -0.45, 0.83, ...]

- Number of Results: Maximum number of results to return (optional)

Default: 10

- Search Score Threshold: Minimum relevance score (optional)

Default: 0.0

Outputs

Search results containing matching documents and relevance scores.

Example Output:

{

"took": 12,

"hits": {

"total": {

"value": 3,

"relation": "eq"

},

"hits": [

{

"_index": "document-index",

"_id": "doc-123",

"_score": 0.9247,

"_source": {

"title": "Cloud Security Best Practices",

"content": "When implementing security in cloud environments...",

"author": "Jane Smith",

"date": "2023-05-21"

}

},

{

"_index": "document-index",

"_id": "doc-456",

"_score": 0.8102,

"_source": {

"title": "Securing AWS Workloads",

"content": "This guide covers the essential security controls for AWS...",

"author": "John Doe",

"date": "2023-01-15"

}

}

]

}

}Implementation Notes

- Use AWS IAM for secure access control to your OpenSearch domain

- Configure appropriate analyzer settings for optimal text search

- Consider implementing k-NN indices for efficient vector search

- Use composite aggregations for complex analytics

- Implement index lifecycle policies for cost optimization

1.3 AWS SQL Schema Retriever

AWS SQL Schema Retriever Interface

Description

The AWS SQL Schema Retriever is designed to extract database schema information from RDS databases. This connector provides metadata about tables, columns, relationships, constraints, and other database objects without retrieving the actual data, making it ideal for database exploration and documentation.

Use Cases

- Database discovery and documentation generation

- Automatic creation of entity-relationship diagrams

- Schema comparison between different environments

- Database migration planning and validation

- Data lineage tracking for regulatory compliance

Inputs

- Database Endpoint: RDS database endpoint address (required)

Example: mydb.cluster-abcdefghijkl.us-east-1.rds.amazonaws.com

- Port: Database connection port (required)

Example: 5432 (PostgreSQL), 3306 (MySQL), 1521 (Oracle)

- Database User: Username with schema query permissions (required)

Example: schema_reader

- Database Password: Authentication password (required)

Example: ••••••••

- Database Name: Name of the database to analyze (required)

Example: production_db

- Database Type: Type of database engine (required)

Options: postgresql, mysql, oracle, sqlserver, mariadb

Outputs

Structured metadata about the database schema in JSON format.

Example Output:

{

"tables": [

{

"name": "customers",

"schema": "public",

"columns": [

{

"name": "customer_id",

"type": "integer",

"nullable": false,

"primary_key": true

},

{

"name": "name",

"type": "varchar(100)",

"nullable": false

},

{

"name": "email",

"type": "varchar(255)",

"nullable": true,

"unique": true

}

],

"indices": [

{

"name": "customers_pkey",

"columns": ["customer_id"],

"type": "PRIMARY KEY"

},

{

"name": "customers_email_idx",

"columns": ["email"],

"type": "UNIQUE"

}

]

},

{

"name": "orders",

"schema": "public",

"columns": [

{

"name": "order_id",

"type": "integer",

"nullable": false,

"primary_key": true

},

{

"name": "customer_id",

"type": "integer",

"nullable": false,

"foreign_key": {

"references": "customers",

"column": "customer_id"

}

}

]

}

]

}Implementation Notes

- Use database users with minimal privileges (information_schema access only)

- Schema analysis may be time-consuming for very large databases

- Different database engines expose schema information differently

- Consider caching schema data for frequently accessed databases

- Be aware of database-specific system tables and views for metadata

1.4 Glue Data Catalog

Glue Data Catalog Interface

Description

The Glue Data Catalog connector provides access to AWS Glue's central metadata repository. This connector allows you to discover, register, and organize metadata about your data assets across your organization, making it easier to find, query, and transform your data.

Use Cases

- Discovering and cataloging datasets across multiple data sources

- Building data lakes with organized metadata

- Managing ETL jobs and workflows

- Creating a unified view of enterprise data assets

- Automating data discovery for analytics and ML pipelines

Inputs

- AWS Access Key ID: AWS access key for authentication (required)

Example: AKIAIOSFODNN7EXAMPLE

- AWS Secret Access Key: AWS secret key for authentication (required)

Example: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- AWS Region Name: AWS region for the Glue Data Catalog (required)

Example: us-east-1

- Glue Database Name: Name of the database in Glue Data Catalog (required)

Example: default

- AWS Region Name: AWS region for the catalog to search (required)

Example: us-east-1

- Message: Custom message or query (optional)

Example: "Find all tables with customer data"

Outputs

Metadata about tables, columns, partitions, and other data assets in JSON format.

Example Output:

{

"database": "marketing_data",

"tables": [

{

"name": "customer_interactions",

"createTime": "2023-03-15T14:22:18Z",

"tableType": "EXTERNAL_TABLE",

"location": "s3://marketing-data-lake/customer-interactions/",

"columns": [

{

"name": "customer_id",

"type": "string"

},

{

"name": "interaction_type",

"type": "string"

},

{

"name": "interaction_date",

"type": "timestamp"

},

{

"name": "channel",

"type": "string"

}

],

"partitionKeys": [

{

"name": "year",

"type": "int"

},

{

"name": "month",

"type": "int"

}

]

}

]

}Implementation Notes

- Use AWS Lake Formation for fine-grained access control to catalog resources

- Implement a consistent tagging strategy for better data organization

- Consider using Glue crawlers to automatically discover and catalog new data

- Integrate with AWS Athena for SQL-based querying of cataloged data

- Maintain schema versioning for evolving data structures

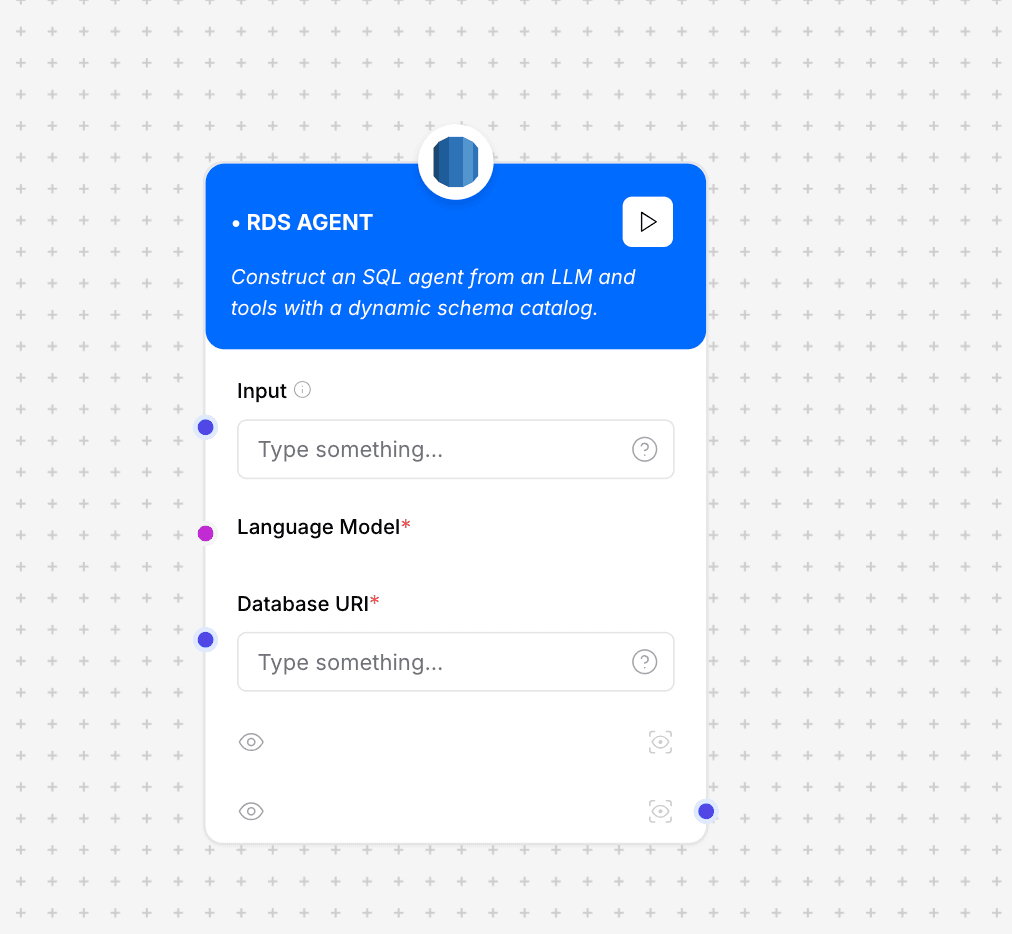

1.5 RDS Agent

Description

The RDS Agent connector makes it easy to manage and monitor RDS instances.

Use Cases

- Monitoring performance

- Automating backups

Inputs

- Input: User input (optional)

- Language Model: Language Model (LLM) to use

- Database URI: Database connection URI

RDS Agent Architecture

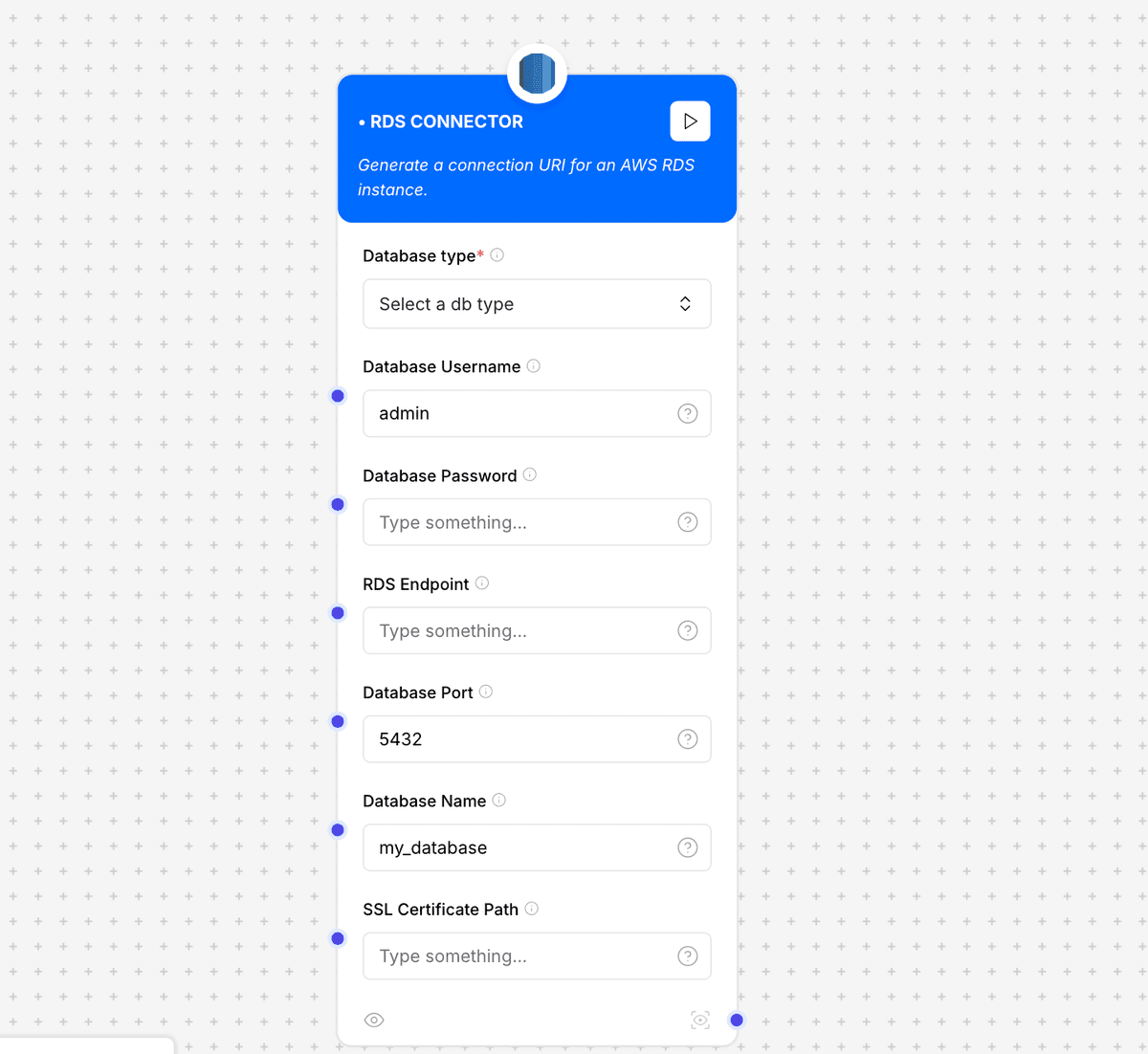

1.6 RDS Connector

Description

This connector allows you to connect to RDS relational databases and perform SQL queries.

Use Cases

- Data extraction and analysis

- Integration with other systems

Inputs

- Database Type: Database type (PostgreSQL, MySQL, etc.)

- Database Username: Username

- Database Password: Password

- RDS Endpoint: RDS server address

- Database Port: Connection port

- Database Name: Database name

- SSL Certificate Path: Path to the SSL certificate (optional)

RDS Connector Architecture

1.7 S3 Directory Loader

S3 Directory Loader Interface

Description

The S3 Directory Loader allows bulk retrieval of files from an entire directory (prefix) in an Amazon S3 bucket. This connector enables processing of multiple files in a single operation, making it ideal for batch document processing.

Use Cases

- Bulk ingestion of document collections for knowledge base creation

- Processing entire directories of log files for analysis

- Batch importing multiple data files into a processing pipeline

- Creating searchable archives from document collections

- Training AI models on directories of sample data

Inputs

- Access Key ID: Your AWS access key ID for authentication (required)

Example: AKIAIOSFODNN7EXAMPLE

- Secret Access Key: Your AWS secret access key for authentication (required)

Example: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- Region: AWS region where your S3 bucket is located (required)

Example: us-east-1

- Bucket Name: Name of the S3 bucket containing your files (required)

Example: my-documents-bucket

- Prefix (Folder Path): Directory prefix within the bucket (required)

Example: quarterly-reports/2023/

- Silent Errors: Toggle for handling non-critical errors silently (optional)

Default: false

- Enable Parallel Processing: Process multiple files concurrently (optional)

Default: true

- Chunking Strategy: Method for breaking down large directories (optional)

Options: basic, adaptive

- Max Characters per Chunk: Character limit for each processing chunk (optional)

Example: 10000

Outputs

An array of processed files with their content and metadata.

Example Output:

[

{

"filename": "quarterly-reports/2023/Q1-financial-report.pdf",

"content": "First quarter financial results show a 12% increase in...",

"metadata": {

"contentType": "application/pdf",

"lastModified": "2023-04-15T09:31:22Z",

"size": 2451983

}

},

{

"filename": "quarterly-reports/2023/Q2-financial-report.pdf",

"content": "Second quarter financial results demonstrate continued...",

"metadata": {

"contentType": "application/pdf",

"lastModified": "2023-07-18T14:22:10Z",

"size": 2812456

}

}

]Implementation Notes

- For large directories, use pagination to process files in manageable batches

- Enable parallel processing for faster handling of multiple files

- Consider file types when processing; some formats may require specific extraction methods

- Implement error handling to continue processing despite individual file failures

Authentication & Security

- Always use IAM roles with least-privilege permissions when possible

- Store AWS credentials securely using environment variables or AWS Secrets Manager

- Rotate access keys regularly according to security best practices

- Enable encryption for data at rest and in transit

- Consider using VPC endpoints for services to enhance security

Best Practices

- Set up CloudWatch monitoring for AWS services to track usage and detect issues

- Implement proper error handling in your workflows to gracefully handle service disruptions

- Use AWS Cost Explorer to monitor and optimize expenses

- Consider regional data residency requirements when choosing AWS regions

- Leverage AWS Auto Scaling capabilities to handle varying workloads

Workflow Integration Tips

- Chain multiple AWS connectors to create comprehensive data pipelines

- Use transformer nodes after connectors to normalize data formats

- Implement caching strategies for frequently accessed but rarely changing data

- Consider batching operations when working with large datasets

- Use filter nodes to remove unnecessary data before processing

1.8 S3 File Loader

S3 File Loader Interface

Description

The S3 File Loader enables your workflow to retrieve files from Amazon S3 buckets. It can access documents, images, videos, and any file type stored in S3's scalable object storage service, making them available for processing in your RAG applications.

Use Cases

- Loading PDF documents, manuals, or technical specifications for knowledge extraction

- Accessing media files for content analysis or processing

- Retrieving datasets for model training or fine-tuning

- Processing large files that exceed traditional database size limits

- Building document archives with powerful searchable indices

Inputs

- Access Key ID: Your AWS access key ID for authentication (required)

Example: AKIAIOSFODNN7EXAMPLE

- Secret Access Key: Your AWS secret access key for authentication (required)

Example: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- Region: AWS region where your S3 bucket is located (required)

Example: us-east-1

- Bucket Name: Name of the S3 bucket containing your files (required)

Example: my-document-bucket

- File Path: Path to the file within the bucket (required)

Example: documents/technical/manual.pdf

- Silent Errors: Toggle for hiding non-critical errors (optional)

Default: false

Outputs

File content or transformed data depending on file type.

For text files, the content is returned as plain text. For PDFs and other binary formats, the connector can extract text or provide the raw binary data for downstream processing.

Implementation Notes

- Organize files in logical folder hierarchies to improve management

- Use IAM roles and least-privilege principles when configuring S3 access

- Consider S3 transfer acceleration for large files in geographically distant regions

- Use S3 object tagging to categorize files for easier access patterns

1.9 S3 Bucket Uploader

S3 Bucket Uploader Interface

Description

The S3 Bucket Uploader enables your workflow to upload files to Amazon S3 buckets. This connector facilitates storing generated documents, processed data, or any content that needs to be persisted in cloud storage for later retrieval or sharing.

Use Cases

- Storing processed data for persistence

- Creating backups of workflow outputs

- Sharing generated documents with other systems

- Building data lakes from processed information

- Archiving chat histories or conversation logs

Inputs

- AWS Access Key ID: Your AWS access key for authentication (required)

Example: AKIAIOSFODNN7EXAMPLE

- AWS Secret Key: Your AWS secret key for authentication (required)

Example: wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY

- Bucket Name: Name of the S3 bucket for upload (required)

Example: my-uploads-bucket

- Strategy for file upload: Method for handling file uploads (required)

Options: direct, multipart, presigned

- Data Inputs: The content to be uploaded (required)

Example: JSON objects, text, binary data

- S3 Prefix: Directory prefix for organizing uploads (optional)

Example: processed-data/2023/

Outputs

Upload confirmation and file URL for the stored content.

Example Output:

{

"status": "success",

"location": "https://my-uploads-bucket.s3.amazonaws.com/processed-data/2023/report-20230815.pdf",

"etag": ""6805f2cfc46c0f04559748bb039d69ae"",

"key": "processed-data/2023/report-20230815.pdf",

"bucket": "my-uploads-bucket"

}Implementation Notes

- Use multipart uploads for files larger than 100MB

- Consider setting appropriate content types for better browser handling

- Implement proper error handling for upload failures

- Use server-side encryption for sensitive data

1.10 RDS Oracle SQL Loader

RDS Oracle SQL Loader Interface

Description

The RDS Oracle SQL Loader enables your workflow to connect to and query Oracle databases hosted on Amazon RDS. This specialized connector provides support for Oracle-specific SQL syntax and features, allowing for seamless integration with existing Oracle database systems.

Use Cases

- Retrieving data from legacy Oracle enterprise systems

- Integrating with Oracle-based data warehouses

- Querying complex relational data with Oracle-specific functions

- Connecting to existing Oracle infrastructure in hybrid cloud deployments

- Supporting migration projects from Oracle to cloud-native databases

Inputs

- RDS Host: Hostname or endpoint of your Oracle RDS instance (required)

Example: myoracledb.abcdefghijkl.us-east-1.rds.amazonaws.com

- Port: Database connection port (required)

Default: 1521

- Username: Oracle database username (required)

Example: ADMIN

- Password: Oracle database password (required)

Example: ••••••••

- Service Name: Oracle service name (required)

Example: ORCL

- SQL Query: Oracle SQL query to execute (required)

Example: SELECT * FROM employees WHERE department_id = 50

- SSL Certificate Path: Path to SSL certificate for secure connections (optional)

Example: /path/to/certificate.pem

Outputs

JSON array of records representing the query results.

Example Output:

[

{

"EMPLOYEE_ID": 107,

"FIRST_NAME": "Diana",

"LAST_NAME": "Lorentz",

"EMAIL": "DLORENTZ",

"PHONE_NUMBER": "590.423.5567",

"HIRE_DATE": "2007-02-07T00:00:00.000Z",

"JOB_ID": "IT_PROG",

"SALARY": 4200,

"DEPARTMENT_ID": 50

},

{

"EMPLOYEE_ID": 115,

"FIRST_NAME": "Alexander",

"LAST_NAME": "Khoo",

"EMAIL": "AKHOO",

"PHONE_NUMBER": "515.127.4562",

"HIRE_DATE": "2003-05-18T00:00:00.000Z",

"JOB_ID": "IT_PROG",

"SALARY": 3100,

"DEPARTMENT_ID": 50

}

]Implementation Notes

- Ensure appropriate Oracle client libraries are available in your environment

- Oracle TNS configuration may be required for complex connectivity scenarios

- Consider using Oracle's RETURNING clause for data manipulation operations

- Be mindful of Oracle-specific date formats and data types

- Optimize queries with appropriate hints for large datasets

1.11 RDS SQL Loader

RDS SQL Loader Interface

Description

The RDS SQL Loader provides a generic SQL interface for connecting to various database engines hosted on Amazon RDS. This connector supports standard SQL queries and is designed to work with MySQL, MariaDB, and other compatible database systems.

Use Cases

- Retrieving structured data from relational databases

- Performing complex data joins across multiple tables

- Running aggregation queries for business intelligence

- Integrating with existing application databases

- Supporting data migration between different database systems

Inputs

- Database Connection URI: Connection string for your database (required)

Example: mysql://admin:password@mydb.cluster-abc123.us-east-1.rds.amazonaws.com:3306/mydb

- Database Username: Username for authentication (required)

Example: admin

- Database Password: Password for authentication (required)

Example: ••••••••

- Database Name: Name of the database to query (required)

Example: customer_database

- SQL Query: SQL query to execute (required)

Example: SELECT * FROM orders WHERE order_date > '2023-01-01' LIMIT 100

Outputs

JSON array containing the query results.

Example Output:

[

{

"order_id": 12345,

"customer_id": 101,

"order_date": "2023-01-15",

"total_amount": 129.99,

"status": "shipped"

},

{

"order_id": 12346,

"customer_id": 102,

"order_date": "2023-01-16",

"total_amount": 79.50,

"status": "processing"

}

]Implementation Notes

- Use prepared statements to prevent SQL injection attacks

- Limit result sets to avoid memory issues with large datasets

- Consider read replicas for query-intensive workloads

- Implement connection pooling for improved performance

- Include appropriate WHERE clauses to optimize query performance

1.12 RDS SQL Server Loader

RDS SQL Server Loader Interface

Description

The RDS SQL Server Loader is specialized for connecting to Microsoft SQL Server instances running on Amazon RDS. This connector supports SQL Server-specific features and T-SQL syntax to seamlessly integrate with existing Microsoft database environments.

Use Cases

- Enterprise data integration with Microsoft SQL Server databases

- Retrieving structured data using T-SQL specific features

- Supporting .NET-based application backends

- Integrating with legacy Microsoft database systems

- Executing complex stored procedures with multiple result sets

Inputs

- Database Connection URI: Connection string for your SQL Server (required)

Example: Server=mysqlserver.abcdef123456.us-east-1.rds.amazonaws.com,1433;Database=mydb;User Id=admin;Password=password;

- Username: SQL Server authentication username (required)

Example: admin

- Password: SQL Server authentication password (required)

Example: ••••••••

- Database Name: Name of the SQL Server database (required)

Example: Northwind

- SQL Query: T-SQL query to execute (required)

Example: SELECT TOP 10 * FROM Customers WHERE Region = 'WA'

- Trust Server Certificate: Whether to trust the server's SSL certificate (optional)

Default: false

Outputs

JSON array containing the query results from SQL Server.

Example Output:

[

{

"CustomerID": "ALFKI",

"CompanyName": "Alfreds Futterkiste",

"ContactName": "Maria Anders",

"ContactTitle": "Sales Representative",

"Address": "Obere Str. 57",

"City": "Berlin",

"Region": null,

"PostalCode": "12209",

"Country": "Germany",

"Phone": "030-0074321"

},

{

"CustomerID": "ANATR",

"CompanyName": "Ana Trujillo Emparedados y helados",

"ContactName": "Ana Trujillo",

"ContactTitle": "Owner",

"Address": "Avda. de la Constitución 2222",

"City": "México D.F.",

"Region": null,

"PostalCode": "05021",

"Country": "Mexico",

"Phone": "(5) 555-4729"

}

]Implementation Notes

- Configure proper encryption settings using the Encrypt parameter

- Use SQL Server-specific features like TOP instead of LIMIT

- Enable Always Encrypted for sensitive data

- Handle unicode data properly with N-prefixed strings for non-English content

- Consider using SQL Server's native XML support for complex structured data