LLM Category Evaluator

The LLM Category Evaluator is a specialized component that evaluates and categorizes text responses from language models based on predefined categories and criteria. It helps ensure responses align with expected categories and maintains quality control in LLM outputs.

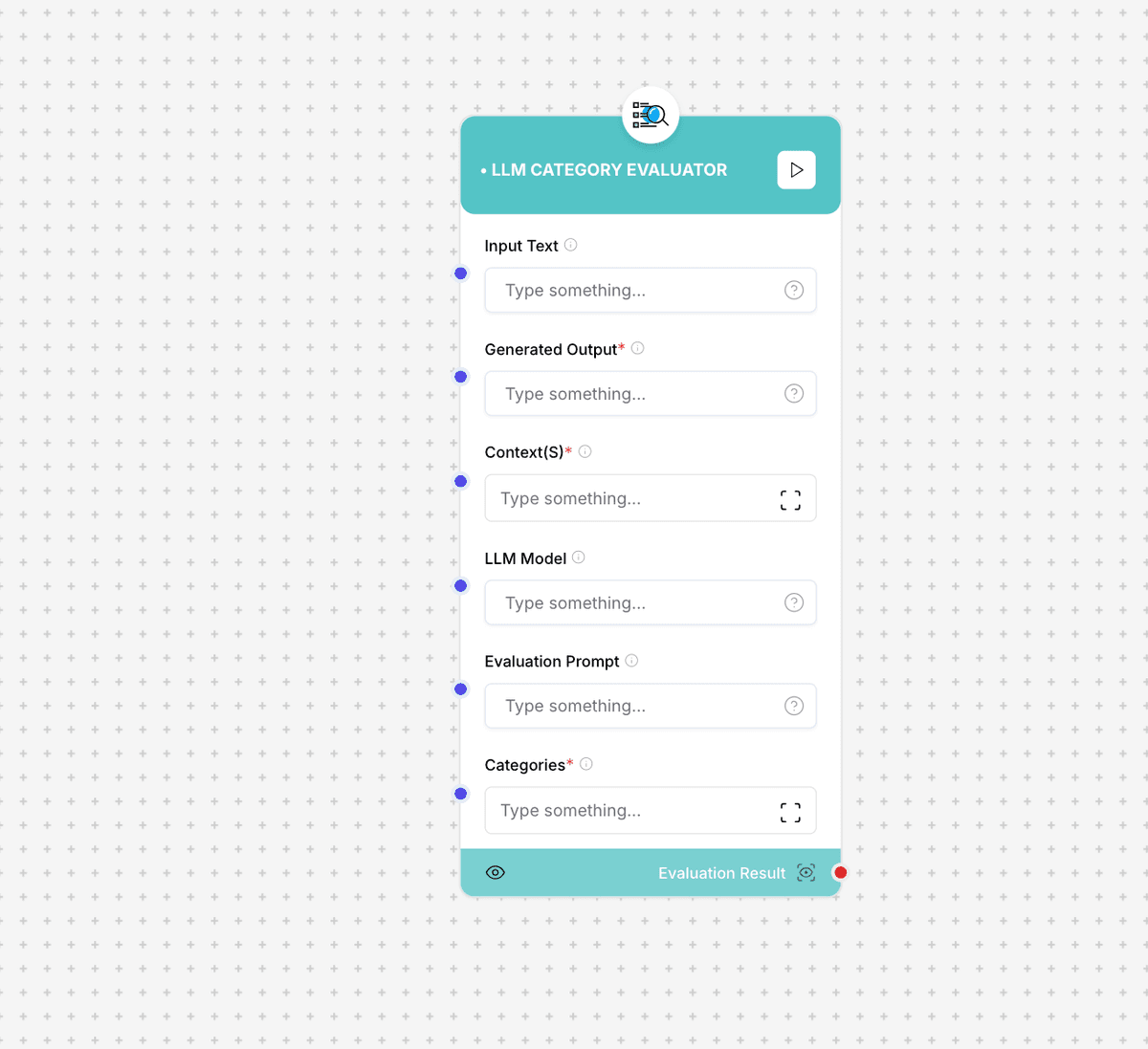

LLM Category Evaluator interface and configuration

Usage Note: Ensure that your categories are well-defined and mutually exclusive for optimal evaluation results. The evaluator requires clear category definitions to provide accurate assessments.

Component Inputs

- Input Text: The text to be evaluated

Example: "The response to be categorized"

- Generated Output: The model's generated text

Example: "Model's response for categorization"

- Context(s): Additional context for evaluation

Example: "Relevant context information"

- LLM Model: The language model to use for evaluation

Example: "gpt-4", "claude-2"

- Categories: List of possible categories

Example: ["technical", "non-technical", "unclear"]

Component Outputs

- Category Result: The determined category

Example: "technical"

- Confidence Score: Confidence in the categorization

Example: 0.95 (95% confidence)

- Explanation: Reasoning for the categorization

Detailed explanation of why the category was chosen

How It Works

The LLM Category Evaluator uses a sophisticated evaluation process to analyze and categorize text. It leverages the specified LLM to understand context, analyze content, and make informed category assignments.

Evaluation Process

- Input text and context analysis

- Category criteria matching

- Content evaluation against each category

- Confidence score calculation

- Category assignment

- Explanation generation

Use Cases

- Content Classification: Categorize responses by type or domain

- Quality Assurance: Verify response categories match expectations

- Response Validation: Ensure responses fit within desired categories

- Automated Sorting: Categorize large volumes of responses

- Content Filtering: Filter responses based on categories

Implementation Example

const categoryEvaluator = new LLMCategoryEvaluator({

inputText: "How do I configure a REST API endpoint?",

generatedOutput: "To configure a REST API endpoint...",

context: "Technical documentation context",

llmModel: "gpt-4",

categories: ["technical", "non-technical", "unclear"]

});

const result = await categoryEvaluator.evaluate();

// Output:

// {

// category: "technical",

// confidence: 0.95,

// explanation: "The response discusses technical API configuration..."

// }Best Practices

- Define clear and distinct categories

- Provide comprehensive context when available

- Use appropriate LLM models for your use case

- Regularly validate category definitions

- Monitor and adjust confidence thresholds