Azure OpenAI Models

A drag-and-drop component for integrating Azure OpenAI services into your workflow. Configure deployment settings and model parameters for enterprise-grade AI capabilities.

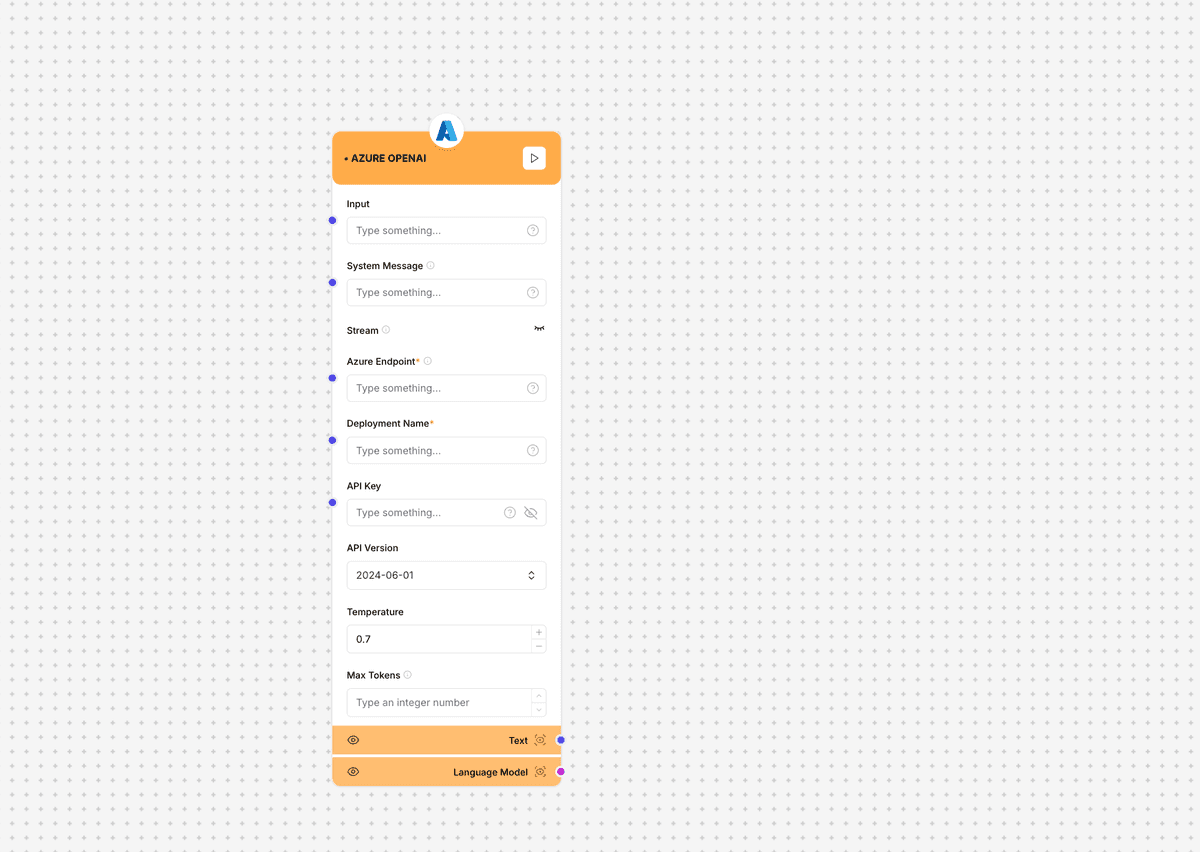

Azure OpenAI component interface and configuration

Azure Setup Required: This component requires a valid Azure OpenAI service setup. Ensure you have created the appropriate deployment and have the necessary permissions before using this component in production.

Component Inputs

- Input: Text input for the model

Example: "Explain quantum computing to a high school student."

- System Message: System prompt to guide model behavior

Example: "You are an educational assistant who explains complex topics using simple analogies."

- Stream: Toggle for streaming responses

Example: true (for real-time token streaming) or false (for complete response)

- Azure Endpoint: Your Azure OpenAI service endpoint

Example: "https://your-resource-name.openai.azure.com/"

- Deployment Name: Name of your model deployment

Example: "gpt-4", "gpt-35-turbo"

- API Key: Your Azure OpenAI API key

Example: "cdd78d98abcdef12345678910abcdef"

- API Version: Azure OpenAI API version

Example: "2024-06-01"
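To show how these inputs fit together, here is a minimal sketch that assembles a chat completions request from them. It assumes the public Azure OpenAI REST route (`/openai/deployments/{deployment}/chat/completions`) and the `api-key` header; `buildChatRequest` is a hypothetical helper, and the component may build its requests differently.

```javascript
// Hypothetical helper (not part of the component): maps the component inputs
// onto a URL and fetch-style options for an Azure OpenAI chat completions call.
function buildChatRequest({
  azureEndpoint,
  deploymentName,
  apiKey,
  apiVersion,
  input,
  systemMessage,
  stream = false,
}) {
  // Strip trailing slashes so the path joins cleanly.
  const base = azureEndpoint.replace(/\/+$/, "");
  const url =
    `${base}/openai/deployments/${encodeURIComponent(deploymentName)}` +
    `/chat/completions?api-version=${encodeURIComponent(apiVersion)}`;

  // The system message is optional; the user input is always sent.
  const messages = [];
  if (systemMessage) messages.push({ role: "system", content: systemMessage });
  messages.push({ role: "user", content: input });

  return {
    url,
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json", "api-key": apiKey },
      body: JSON.stringify({ messages, stream }),
    },
  };
}

const request = buildChatRequest({
  azureEndpoint: "https://your-resource-name.openai.azure.com/",
  deploymentName: "gpt-4",
  apiKey: process.env.AZURE_OPENAI_API_KEY ?? "",
  apiVersion: "2024-06-01",
  input: "Explain quantum computing to a high school student.",
  systemMessage: "You are an educational assistant who explains complex topics using simple analogies.",
});
console.log(request.url);
```

The returned `url` and `options` could be passed to `fetch(request.url, request.options)`; no network call is made in the sketch itself.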

Component Outputs

- Text: Generated text output

Example: "Quantum computing is like a special kind of calculator that uses the weird rules of very tiny particles..."

- Language Model: Model information and metadata

Example: deployment: gpt-4, usage: {prompt_tokens: 35, completion_tokens: 120, total_tokens: 155}
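As an illustration of where these two outputs could come from, the sketch below maps a non-streaming chat completions response onto a text value and a metadata object. The response shape (`choices[0].message.content`, `usage`) follows the Azure OpenAI chat completions API; `toComponentOutputs` and the output field names are assumptions made for this example, not the component's documented internals.

```javascript
// Hypothetical helper: derives the two component outputs from an API response.
function toComponentOutputs(apiResponse, deploymentName) {
  const choice = apiResponse.choices?.[0];
  return {
    // Text output: the assistant message of the first choice, or "" if absent.
    text: choice?.message?.content ?? "",
    // Language Model output: deployment name plus token usage, if reported.
    languageModel: {
      deployment: deploymentName,
      usage: apiResponse.usage ?? null,
    },
  };
}

// Sample response using the values from the examples above.
const sampleResponse = {
  choices: [
    {
      message: { role: "assistant", content: "Quantum computing is like a special kind of calculator..." },
      finish_reason: "stop",
    },
  ],
  usage: { prompt_tokens: 35, completion_tokens: 120, total_tokens: 155 },
};

const outputs = toComponentOutputs(sampleResponse, "gpt-4");
console.log(outputs.text);
console.log(outputs.languageModel);
```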

Model Parameters

Temperature

Controls randomness in the generated text. Higher values produce more diverse outputs; lower values make responses more focused and repeatable.

Default: 0.7

Range: 0.0 to 2.0
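To make the effect of temperature concrete, the sketch below applies the standard temperature-scaled softmax to a few made-up token scores. It is a simplified illustration of how sampling temperature is commonly applied, not the service's internal implementation; the logits and the function name are invented for the example.

```javascript
// Converts raw token scores (logits) into probabilities at a given temperature.
function softmaxWithTemperature(logits, temperature) {
  if (temperature <= 0) {
    // Treat 0 as greedy decoding: all probability goes to the largest logit.
    const maxIndex = logits.indexOf(Math.max(...logits));
    return logits.map((_, i) => (i === maxIndex ? 1 : 0));
  }
  const scaled = logits.map((x) => x / temperature);
  const maxScaled = Math.max(...scaled); // subtract the max for numerical stability
  const exps = scaled.map((x) => Math.exp(x - maxScaled));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Made-up scores for three candidate tokens.
const logits = [2.0, 1.0, 0.5];
for (const t of [0.2, 0.7, 2.0]) {
  const probs = softmaxWithTemperature(logits, t).map((p) => p.toFixed(3));
  console.log(`temperature ${t}: ${probs.join(", ")}`);
}
```

At low temperatures almost all probability mass sits on the top-scoring token, while higher temperatures flatten the distribution so that less likely tokens are sampled more often.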

Recommendation: Lower (0.0-0.3) for factual tasks, higher (0.7-1.0) for creative applications

Max Tokens

Maximum number of tokens to generate in the response

Default: Varies by model

Range: 1 to the model maximum (e.g., 4096 for some GPT-4 deployments; the exact limit depends on the model version)
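One way to pick a value inside this range is to start from the expected response length, add some headroom, and clamp the result to the model limit. The sketch below does that; `chooseMaxTokens` and its 25% default headroom are assumptions for illustration, and `modelMax` must come from the limits of the model you have deployed.

```javascript
// Hypothetical helper: picks a max-tokens value with headroom, clamped to [1, modelMax].
function chooseMaxTokens(expectedTokens, modelMax, headroom = 0.25) {
  if (!Number.isInteger(modelMax) || modelMax < 1) {
    throw new RangeError("modelMax must be a positive integer");
  }
  // Add headroom so slightly longer responses are not cut off.
  const withHeadroom = Math.ceil(expectedTokens * (1 + headroom));
  // Never go below 1 or above the model limit.
  return Math.min(modelMax, Math.max(1, withHeadroom));
}

console.log(chooseMaxTokens(400, 4096));  // 500
console.log(chooseMaxTokens(5000, 4096)); // 4096 (clamped to the model limit)
```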

Recommendation: Set according to the expected response length, leaving a buffer for longer responses

Implementation Example

    // Basic configuration
    const azureOpenAI = {
      azureEndpoint: "https://your-resource-name.openai.azure.com/",
      deploymentName: "gpt-4",
      apiKey: process.env.AZURE_OPENAI_API_KEY,
      apiVersion: "2024-06-01",
      systemMessage: "You are a helpful assistant."
    };

    // Advanced configuration
    const advancedAzureOpenAI = {
      azureEndpoint: "https://your-resource-name.openai.azure.com/",
      deploymentName: "gpt-35-turbo",
      apiKey: process.env.AZURE_OPENAI_API_KEY,
      apiVersion: "2024-06-01",
      maxTokens: 2000,
      temperature: 0.5,
      stream: true,
      frequencyPenalty: 0.5,
      presencePenalty: 0.0,
      topP: 0.95
    };

    // Usage example
    // azureOpenAIComponent is assumed to be a component instance that has
    // already been configured with one of the objects above.
    async function generateResponse(input) {
      const response = await azureOpenAIComponent.generate({
        input: input,
        systemMessage: "You are an expert in finance.",
        temperature: 0.3
      });
      return response.text;
    }

Use Cases

- Enterprise Applications: Build AI solutions with Microsoft's compliance and security standards

- Regulated Industries: Deploy AI in healthcare, finance, and government with proper data governance

- Content Creation: Generate marketing copy, documentation, and reports with enterprise controls

- Conversational Agents: Create enterprise-ready chatbots and virtual assistants

- Data Processing: Extract insights and summaries from documents with proper data residency

Best Practices

- Secure API keys using Azure Key Vault or environment variables

- Monitor usage and costs through the Azure portal

- Implement proper error handling for API failures

- Choose appropriate deployment regions for data residency requirements

- Regularly update to the latest API versions for new features
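
As one possible approach to the error-handling practice above, the sketch below retries a failing call with exponential backoff. `withRetry` is a hypothetical helper, and the assumption that errors carry an HTTP `status` property (429 for rate limiting, 5xx for server errors) may not match what the component actually throws.

```javascript
// Hypothetical helper: retries an async operation with exponential backoff.
async function withRetry(
  operation,
  { retries = 3, baseDelayMs = 500, isRetryable = () => true } = {}
) {
  let lastError;
  for (let attempt = 0; attempt <= retries; attempt++) {
    try {
      return await operation();
    } catch (error) {
      lastError = error;
      // Stop when attempts are exhausted or the error is not worth retrying.
      if (attempt === retries || !isRetryable(error)) break;
      // Wait baseDelayMs, then 2x, 4x, ... before the next attempt.
      const delayMs = baseDelayMs * 2 ** attempt;
      await new Promise((resolve) => setTimeout(resolve, delayMs));
    }
  }
  throw lastError;
}

// Assumption: failed calls expose the HTTP status code as error.status.
const retryableStatus = (error) => error.status === 429 || error.status >= 500;

// Demo with a simulated call that is rate limited twice before succeeding.
let demoCalls = 0;
async function flakyCall() {
  demoCalls++;
  if (demoCalls < 3) {
    const error = new Error("Too many requests");
    error.status = 429;
    throw error;
  }
  return "response text";
}

withRetry(flakyCall, { baseDelayMs: 10, isRetryable: retryableStatus })
  .then((text) => console.log(`succeeded after ${demoCalls} calls: ${text}`))
  .catch((error) => console.error("gave up:", error.message));
```

In a real workflow, `flakyCall` would be replaced by the actual model call, and non-retryable failures such as authentication errors would surface immediately.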