Similarity Evaluator

The Similarity Evaluator is a powerful component that measures the semantic similarity between texts, helping ensure consistency and relevance in language model outputs. It provides detailed similarity metrics and analysis for text comparison.

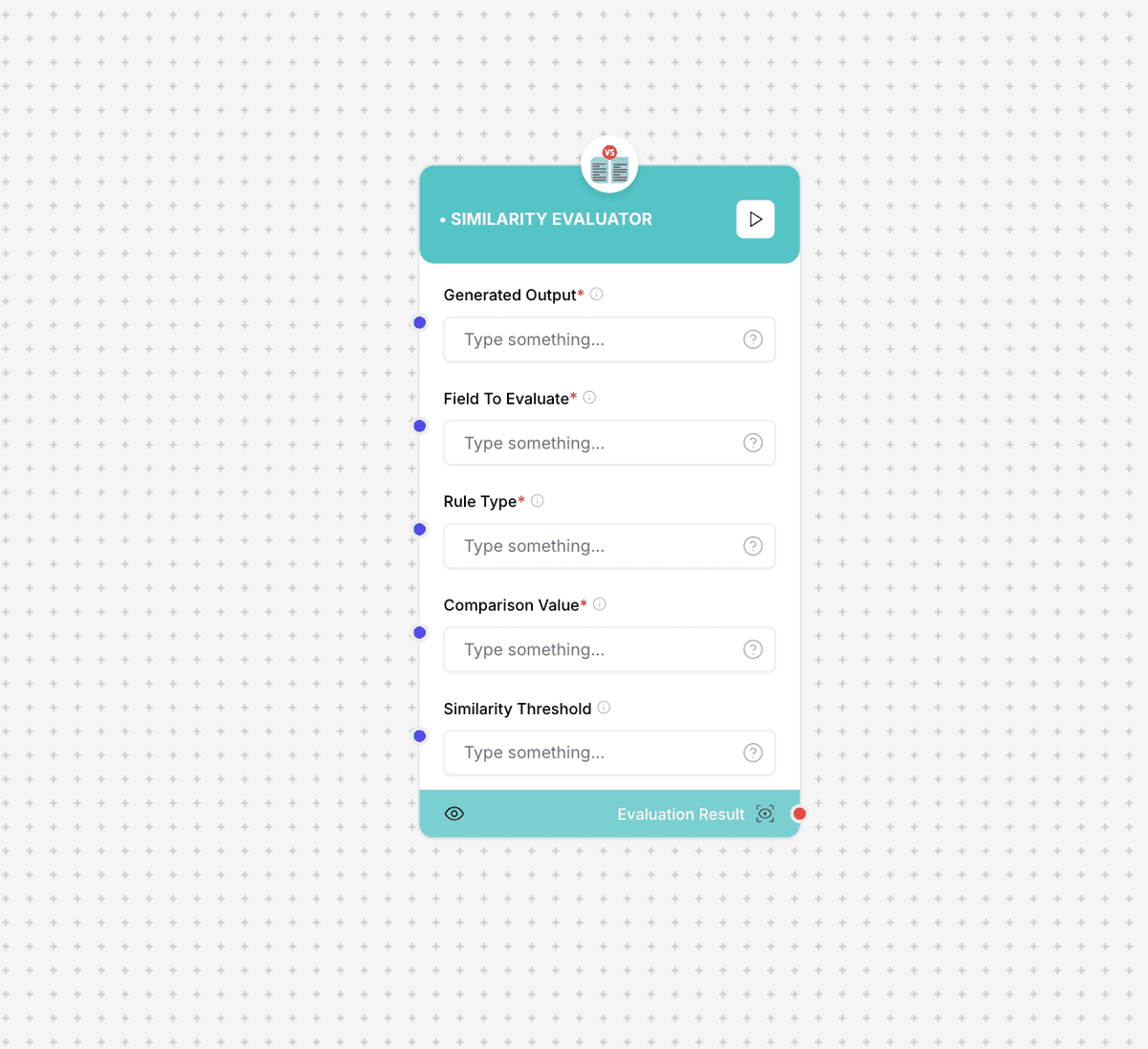

Similarity Evaluator interface and configuration

Usage Note: The similarity threshold should be carefully calibrated based on your specific use case. Different types of content may require different threshold levels for optimal results.

Component Inputs

- Generated Output: The text to evaluate

Example: "The generated response to compare"

- Field To Evaluate: Specific field for comparison

Example: "content", "summary", "description"

- Rule Type: Type of similarity comparison

Example: "semantic", "exact", "fuzzy"

- Comparison Value: Reference text for comparison

Example: "The reference text to compare against"

- Similarity Threshold: Minimum similarity score

Example: 0.8 (80% similarity required)

Component Outputs

- Similarity Score: Calculated similarity value

Example: 0.92 (92% similar)

- Evaluation Result: Pass/fail based on threshold

Example: true/false

- Analysis Details: Detailed comparison breakdown

Explanation of similarity assessment

How It Works

The Similarity Evaluator uses advanced natural language processing techniques to compare texts and determine their similarity. It can operate using different comparison methods depending on the requirements.

Evaluation Process

- Text preprocessing and normalization

- Feature extraction based on rule type

- Similarity calculation

- Threshold comparison

- Result analysis generation

- Detailed feedback compilation

Use Cases

- Content Matching: Compare generated content with references

- Response Validation: Verify response similarity to expected outputs

- Plagiarism Detection: Check for content uniqueness

- Quality Control: Ensure consistent response quality

- Content Verification: Validate content against standards

Implementation Example

const similarityEvaluator = new SimilarityEvaluator({

generatedOutput: "The quick brown fox jumps over the lazy dog",

fieldToEvaluate: "content",

ruleType: "semantic",

comparisonValue: "A swift brown fox leaps above a lazy dog",

similarityThreshold: 0.8

});

const result = await similarityEvaluator.evaluate();

// Output:

// {

// similarityScore: 0.92,

// passed: true,

// analysis: "High semantic similarity detected between texts..."

// }Additional Resources

Best Practices

- Choose appropriate similarity metrics for your use case

- Calibrate thresholds based on content type

- Consider language-specific requirements

- Regularly validate and adjust settings

- Use appropriate preprocessing for better results