Embedding Similarity

Calculate the similarity between embedding vectors using a choice of metrics. The component supports cosine similarity, Euclidean distance, dot product, and more, with configurable options for different use cases.

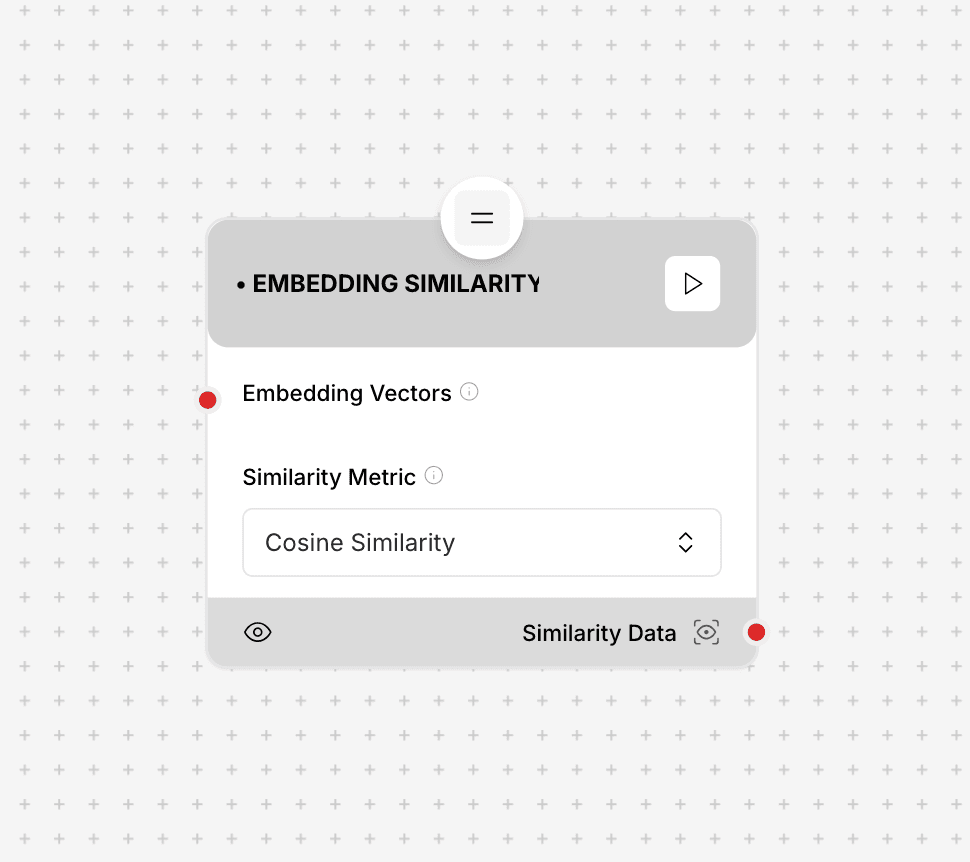

Embedding Similarity component interface and configuration

Dimension Matching: When comparing embedding vectors, ensure both vectors have the same dimensionality. Attempting to compare vectors of different dimensions will result in an error.
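A dimension check can also be run before vectors reach the component. The following is a minimal sketch; `assertSameDimensions` is a hypothetical helper name, not part of the component's API, and the error types are an assumption.

```javascript
// Hypothetical pre-flight check; not part of the EmbeddingSimilarity API.
function assertSameDimensions(a, b) {
  if (!Array.isArray(a) || !Array.isArray(b)) {
    throw new TypeError("Both vectors must be arrays of numbers");
  }
  if (a.length !== b.length) {
    throw new RangeError(`Dimension mismatch: ${a.length} vs ${b.length}`);
  }
  // Return the shared dimensionality so callers can log or store it.
  return a.length;
}
```

Calling `assertSameDimensions([0.1, 0.2, 0.3], [0.2, 0.3, 0.4])` returns `3`, while a pair of vectors with different lengths throws before any comparison is attempted.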

Component Inputs

- Metric: The similarity metric to use for comparison

Example: "cosine", "euclidean", "dot-product", "manhattan"

- Vector1: First embedding vector for comparison

Example: [0.1, 0.2, 0.3, 0.4, ...]

- Vector2: Second embedding vector for comparison

Example: [0.2, 0.3, 0.4, 0.5, ...]

- Source Vector: For batch comparisons, the reference vector

Example: [0.1, 0.2, 0.3, 0.4, ...]

- Target Vectors: For batch comparisons, array of vectors to compare against

Example: [[0.2, 0.3, 0.4], [0.3, 0.4, 0.5], [0.4, 0.5, 0.6]]

Component Outputs

- Similarity Score: Raw similarity value based on the selected metric

Example: 0.95 for cosine similarity

- Normalized Score: Normalized value between 0 and 1 (1 being most similar)

Example: 0.97 (normalized from the raw score)

- Metadata: Additional information about the comparison

Example: vector_dimensions: 768, comparison_time: 0.023

- Batch Results: For batch comparisons, array of similarity scores

Example: [0.95, 0.87, 0.76]
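This page does not state how the Normalized Score is derived from the raw score. The sketch below shows mappings that are commonly used for this purpose; all three functions are assumptions for illustration, and the component may use different formulas.

```javascript
// Assumed mappings; the component's actual normalization is not documented here.

// Cosine similarity: map [-1, 1] linearly onto [0, 1].
function normalizeCosine(score) {
  return (score + 1) / 2;
}

// Euclidean distance: map [0, Infinity) onto (0, 1]; distance 0 gives 1.
function normalizeEuclidean(distance) {
  return 1 / (1 + distance);
}

// Dot product: squash (-Infinity, Infinity) onto (0, 1) with a logistic curve.
function normalizeDotProduct(score) {
  return 1 / (1 + Math.exp(-score));
}
```

Under the linear mapping, a raw cosine score of 0.95 becomes approximately 0.975, which is close to the 0.97 in the example above, although the match may be coincidental.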

Metric Comparison

Cosine Similarity

Measures the cosine of the angle between two vectors, focusing on direction rather than magnitude

Range: -1 to 1 (normalized to 0-1)

Interpretation: Higher value means greater similarity

Ideal for: Text embeddings, semantic search

Note: Ignores magnitude differences, best for normalized vectors

Euclidean Distance

Measures the straight-line distance between two points in Euclidean space

Range: 0 to ∞ (normalized to 0-1, where values nearer 1 indicate closer vectors)

Interpretation: Lower raw distance means greater similarity

Ideal for: Physical or spatial data, when magnitude matters

Note: Sensitive to scale, consider normalizing input vectors

Dot Product

Multiplies corresponding elements of the two vectors and sums the products

Range: -∞ to ∞ (normalized to 0-1)

Interpretation: Higher value means greater similarity

Ideal for: Quick calculations, pre-normalized vectors

Note: Affected by both direction and magnitude

Implementation Example
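For reference, the three metrics described above reduce to a few lines of arithmetic. The functions below are a standalone sketch, independent of the component's API; the function names are illustrative, and both inputs are assumed to be equal-length arrays of numbers.

```javascript
// Standalone sketches of the metrics; names are illustrative only.

// Dot product: sum of element-wise products.
function dotProduct(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) sum += a[i] * b[i];
  return sum;
}

// Cosine similarity: dot product divided by the product of the vector lengths.
function cosineSimilarity(a, b) {
  const normA = Math.sqrt(dotProduct(a, a));
  const normB = Math.sqrt(dotProduct(b, b));
  if (normA === 0 || normB === 0) {
    throw new RangeError("Cosine similarity is undefined for a zero vector");
  }
  return dotProduct(a, b) / (normA * normB);
}

// Euclidean distance: square root of the summed squared differences.
function euclideanDistance(a, b) {
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const diff = a[i] - b[i];
    sum += diff * diff;
  }
  return Math.sqrt(sum);
}
```

For the vectors `[0.1, 0.2, 0.3]` and `[0.2, 0.3, 0.4]`, these functions give a dot product of about 0.2, a cosine similarity of roughly 0.993, and a Euclidean distance of roughly 0.173.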

```javascript
// Calculate cosine similarity
const cosineSim = new EmbeddingSimilarity({
  metric: "cosine"
});

// Compare two vectors
const result = await cosineSim.compare({
  vector1: [0.1, 0.2, 0.3],
  vector2: [0.2, 0.3, 0.4]
});

// Batch comparison
const batchResult = await cosineSim.compareBatch({
  sourceVector: [0.1, 0.2, 0.3],
  targetVectors: [
    [0.2, 0.3, 0.4],
    [0.3, 0.4, 0.5],
    [0.4, 0.5, 0.6]
  ]
});

// Using different metrics
const euclideanSim = new EmbeddingSimilarity({
  metric: "euclidean"
});

const dotProductSim = new EmbeddingSimilarity({
  metric: "dot-product"
});

console.log(result.similarity.score); // Raw similarity score
console.log(result.similarity.normalized_score); // Normalized score between 0-1
```

Use Cases

- Semantic Search: Find similar documents or content based on embedding similarity

- Recommendation Systems: Compare user preference embeddings with content embeddings

- Duplicate Detection: Identify similar or duplicate content

- Clustering: Group similar items based on embedding similarity

- Relevance Ranking: Sort search results by similarity to a query
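As an illustration of the relevance-ranking use case, the sketch below scores a set of candidates against a query vector and returns the best matches. It uses a plain cosine function rather than the component, and `rankBySimilarity` and the `{ id, vector }` item shape are hypothetical names chosen for this example.

```javascript
// Plain cosine similarity; assumes equal-length, non-zero vectors.
function cosine(a, b) {
  let dot = 0, normA = 0, normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  return dot / Math.sqrt(normA * normB);
}

// Hypothetical helper: score every item, sort by descending score, keep topK.
function rankBySimilarity(query, items, topK = items.length) {
  return items
    .map((item) => ({ id: item.id, score: cosine(query, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, topK);
}

// Usage: the item pointing in the same direction as the query ranks first.
const ranked = rankBySimilarity(
  [1, 0],
  [
    { id: "a", vector: [0, 1] },
    { id: "b", vector: [1, 0] },
    { id: "c", vector: [1, 1] }
  ],
  2
);
console.log(ranked.map((r) => r.id)); // [ 'b', 'c' ]
```

The same pattern covers semantic search and recommendations; only the source of the query vector changes.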

Best Practices

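- Normalize vectors to unit length when results should not depend on magnitude; cosine similarity already ignores magnitude, and on unit vectors the dot product gives the same value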

- Select the appropriate metric based on your specific use case

- For large-scale comparisons, consider a vector search library such as FAISS or a managed vector database such as Pinecone

- Validate vector dimensions before comparisons to avoid runtime errors

- Use batch processing for multiple comparisons to improve performance
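One reason normalization is often recommended is that, once vectors are scaled to unit length, the dot product and cosine similarity coincide, so the cheaper dot product can be used for scoring. The sketch below demonstrates this; `toUnitVector` and `dot` are illustrative helpers, not part of the component.

```javascript
// Scale a vector to length 1; a zero vector has no direction and is rejected.
function toUnitVector(v) {
  const norm = Math.sqrt(v.reduce((sum, x) => sum + x * x, 0));
  if (norm === 0) throw new RangeError("Cannot normalize a zero vector");
  return v.map((x) => x / norm);
}

// Dot product of two equal-length vectors.
function dot(a, b) {
  return a.reduce((sum, x, i) => sum + x * b[i], 0);
}

// [1, 2] and [2, 4] point the same way but differ in magnitude.
const rawDot = dot([1, 2], [2, 4]);                              // depends on magnitude
const unitDot = dot(toUnitVector([1, 2]), toUnitVector([2, 4])); // direction only
console.log(rawDot, unitDot);
```

Here the raw dot product is 10, while the dot product of the unit vectors is 1 up to floating-point rounding, matching the cosine similarity of two parallel vectors.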