Vertex AI Embeddings

Generate text embeddings with Google's Vertex AI platform. The component exposes model parameters, sends requests in parallel, and is intended for production deployments.

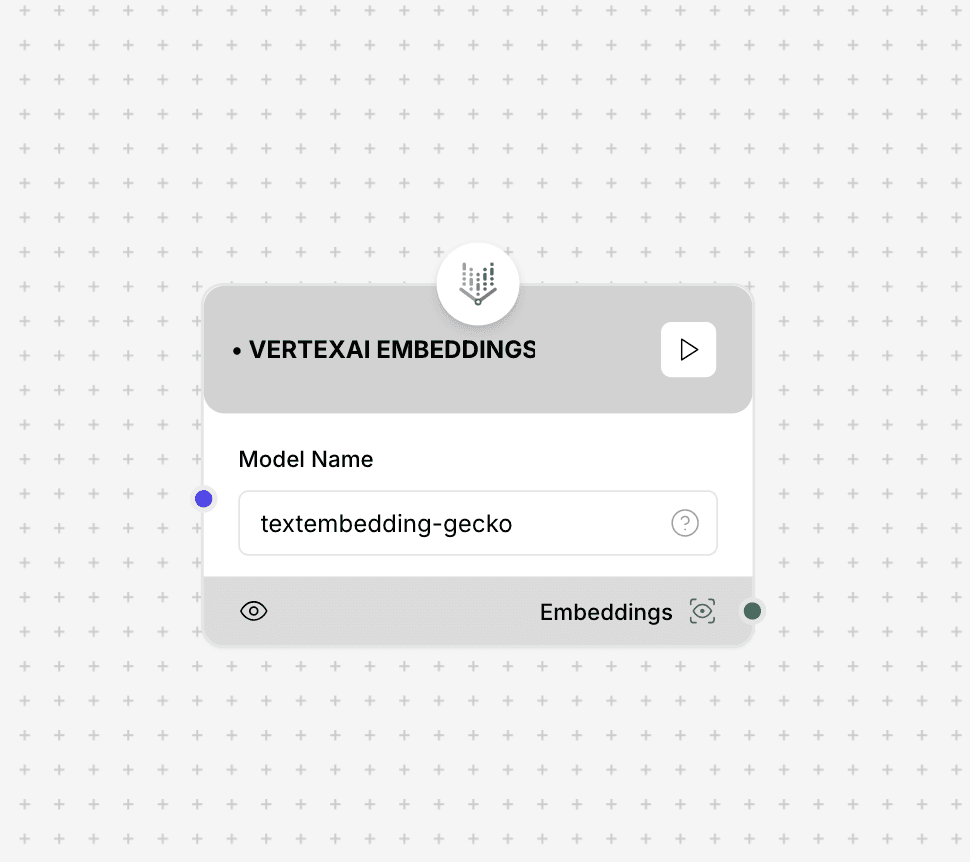

Vertex AI Embeddings component interface and configuration

Google Cloud Notice: This component requires valid Google Cloud credentials with appropriate permissions to access Vertex AI services. Ensure your service account has the required roles assigned before using this component in production.

Component Inputs

- Credentials: Google Cloud service account credentials

Example: client_email: service-account@project.iam.gserviceaccount.com, private_key: -----BEGIN PRIVATE KEY-----...

- Location: Google Cloud region where Vertex AI is deployed

Example: "us-central1", "europe-west4"

- Project: Google Cloud project ID

Example: "my-gcp-project-123456"

- Model Name: Vertex AI embedding model name

Example: "textembedding-gecko", "textembedding-gecko-multilingual"

- Max Output Tokens: Maximum number of tokens to generate

Example: 1024 (Default)

- Request Parallelism: Number of parallel requests to make

Example: 5 (Default)
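To illustrate what a Request Parallelism value means in practice, here is a minimal sketch of bounded concurrency. It is not the component's internal implementation; `mapWithConcurrency` and its `worker` callback are hypothetical names introduced for this example, and the worker stands in for whatever call actually sends one embedding request.

```typescript
// Runs `worker` over `items` with at most `limit` calls in flight at once.
// Hypothetical helper for illustration; not part of the component's API.
async function mapWithConcurrency<T, R>(
  items: T[],
  limit: number,
  worker: (item: T, index: number) => Promise<R>
): Promise<R[]> {
  const results: R[] = new Array(items.length);
  const laneCount = Math.min(Math.max(1, Math.floor(limit)), items.length);
  let next = 0; // index of the next item that has not been claimed yet

  // Each lane claims one item at a time until none are left.
  async function runLane(): Promise<void> {
    while (next < items.length) {
      const i = next++;
      results[i] = await worker(items[i], i);
    }
  }

  const lanes: Promise<void>[] = [];
  for (let n = 0; n < laneCount; n++) {
    lanes.push(runLane());
  }
  await Promise.all(lanes);
  return results; // same order as `items`
}
```

With a limit of 5, at most five requests are outstanding at any moment, and results come back in input order regardless of which request finishes first.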

Component Outputs

- Embeddings: Vector representations of the input text

Example: [0.015, -0.025, 0.056, ...]

- Dimensions: The dimension of the embedding vectors

Example: 768 for textembedding-gecko models

- Metadata: Additional information about the embedding request

Example: model: textembedding-gecko, token_count: 48
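The outputs listed above could be modeled as a type with a small sanity check, as in the sketch below. The field names `embeddings`, `dimensions`, and `metadata` are assumptions based on this list, as is the choice to hold one vector per input text; the component's actual response shape may differ.

```typescript
// Assumed shape of the component output (illustrative only).
interface EmbeddingResult {
  embeddings: number[][]; // one vector per input text (assumption)
  dimensions: number;     // e.g. 768 for textembedding-gecko models
  metadata: { model: string; token_count: number };
}

// Throws if any vector has the wrong length or contains a non-finite value.
function validateResult(result: EmbeddingResult): void {
  result.embeddings.forEach((vector, i) => {
    if (vector.length !== result.dimensions) {
      throw new Error(
        `vector ${i} has ${vector.length} values, expected ${result.dimensions}`
      );
    }
    if (!vector.every((x) => Number.isFinite(x))) {
      throw new Error(`vector ${i} contains a non-finite value`);
    }
  });
}
```

A check like this is cheap to run before writing vectors to a store, since most vector indexes reject or silently mishandle vectors of the wrong dimension.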

Model Comparison

textembedding-gecko

Google's standard text embedding model optimized primarily for English content

Dimensions: 768

Language Support: English-optimized

Context Length: Up to 3072 tokens

Ideal for: English content retrieval and semantic search

textembedding-gecko-multilingual

Multilingual text embedding model supporting 100+ languages

Dimensions: 768

Language Support: 100+ languages

Context Length: Up to 3072 tokens

Ideal for: Cross-lingual applications and global content retrieval

Implementation Example

    // Basic configuration
    const embedder = new VertexAIEmbeddor({
      credentials: {
        client_email: process.env.GCP_CLIENT_EMAIL,
        private_key: process.env.GCP_PRIVATE_KEY
      },
      location: "us-central1",
      project: process.env.GCP_PROJECT_ID,
      modelName: "textembedding-gecko"
    });

    // Advanced configuration
    const advancedEmbedder = new VertexAIEmbeddor({
      credentials: {
        client_email: process.env.GCP_CLIENT_EMAIL,
        private_key: process.env.GCP_PRIVATE_KEY
      },
      location: "us-central1",
      project: process.env.GCP_PROJECT_ID,
      modelName: "textembedding-gecko-multilingual",
      maxOutputTokens: 2048,
      maxRetries: 5,
      requestParallelism: 10,
      temperature: 0.0,
      topK: 40,
      topP: 0.95
    });

    // Generate embeddings
    const result = await embedder.embed({
      input: "Your text to embed"
    });

    // Batch processing
    const batchResult = await embedder.embedBatch({
      inputs: [
        "First text to embed",
        "Second text to embed"
      ]
    });

    console.log(result.embeddings);

Use Cases

- Google Cloud RAG Systems: Build retrieval systems within the Google Cloud ecosystem

- Enterprise Search: Create secure, compliant vector search for corporate content

- Multilingual Applications: Support global content with the multilingual model variant

- High-Volume Processing: Leverage parallel processing for high-throughput embedding generation

- AI Platform Integration: Seamlessly integrate with other Vertex AI services
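For the retrieval and search use cases, the core step is ranking stored vectors by similarity to a query vector. The sketch below shows that step with cosine similarity over an in-memory array. `IndexedDoc`, `cosineSimilarity`, and `topK` are hypothetical names for this example; a production system would normally delegate this to a vector database rather than scan every document.

```typescript
interface IndexedDoc {
  id: string;
  vector: number[]; // embedding previously generated for this document
}

// Cosine similarity: dot(a, b) / (|a| * |b|). Returns 0 for a zero vector.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("vectors must have the same dimension");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the k documents most similar to the query, best match first.
function topK(
  query: number[],
  docs: IndexedDoc[],
  k: number
): { id: string; score: number }[] {
  return docs
    .map((doc) => ({ id: doc.id, score: cosineSimilarity(query, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

The query text and the documents should be embedded with the same model, since vectors from different models are not comparable.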

Best Practices

- Store credentials securely using environment variables or secret management

- Use service accounts with minimal necessary permissions (principle of least privilege)

- Select the appropriate regional endpoint to minimize latency for your users

- Implement caching strategies to reduce redundant embedding calls

- Adjust request parallelism based on your application's performance requirements
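The caching recommendation above can be sketched as a wrapper around any function that embeds a single text. `EmbedFn` and `withCache` are hypothetical names for this example, and the wrapped function is a stand-in for whatever call your application makes to the component. The cache stores promises rather than vectors, so concurrent requests for the same text share one call.

```typescript
// Any async function that turns one text into one vector (assumed signature).
type EmbedFn = (text: string) => Promise<number[]>;

// Wraps `embed` with an in-memory cache keyed by the exact input text.
function withCache(embed: EmbedFn): EmbedFn {
  const cache = new Map<string, Promise<number[]>>();
  return (text: string) => {
    const hit = cache.get(text);
    if (hit) return hit;
    const pending = embed(text).catch((err) => {
      cache.delete(text); // do not keep failed requests in the cache
      throw err;
    });
    cache.set(text, pending);
    return pending;
  };
}
```

This map grows without bound, which is acceptable for a short-lived batch job. A long-running service would likely want an eviction policy or an external cache, and should include the model name in the key if more than one model is in use.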